© ROOT-NATION.com - Use of content is permitted with a backlink.

It's hard for me to trust the AI revolution, even though it may well be truly groundbreaking. The reason isn't political; it's mathematical. The problem is AI hallucinations.

Imagine a world where a calculator occasionally says 2+2=5. Or where accounting software invents transactions that never happened. Sounds absurd, right? Yet this is the kind of world we’re stepping into with today’s AI revolution.

From the very beginning of the digital era, one thing was certain — computers could crash, printers could misbehave, and users could be driven mad by blue screens of death. But through it all, they were trusted to do one thing flawlessly: perform calculations. That foundation of trust, built over decades, is now being steadily eroded by what experts are calling AI hallucinations.

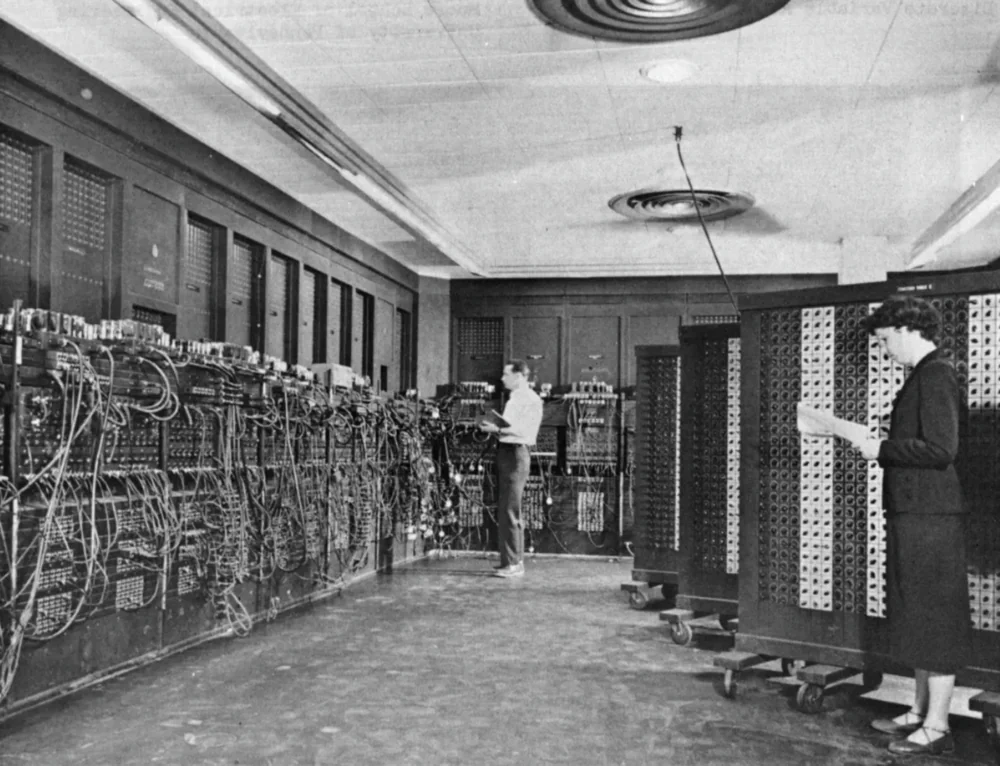

The history of computers didn't begin with social media or video games, but with the need to perform complex calculations. The first general-purpose electronic computer, ENIAC, occupied a room measuring 7 by 13 meters and housed nearly 18,000 vacuum tubes along with some 1,500 relays. This massive machine was built to calculate artillery firing tables and was later used in calculations for the hydrogen bomb. It could perform 5,000 additions, 350 multiplications, or 40 divisions per second, which was faster than anything imaginable at the time.

More than 75 years have passed since then, and computers have undergone a dramatic transformation. From room-sized giants, they’ve evolved into devices small enough to fit in our pockets. Yet despite all the technological revolutions, their core purpose has remained the same—computers are, above all, machines built to calculate.

Excel – math you can trust

A good example of this continuity is Microsoft Excel—a program that, at its core, is still an advanced visual calculator. Over the decades, Excel has become a backbone of the global economy, used by everyone from small businesses to multinational corporations, from household budgeting to the complex financial models of Wall Street. While it has its critics and limitations, one thing has remained consistent: its calculations are reliable.

Of course, errors can occur in Excel too. A common example is the #VALUE! message, which appears when we try to perform mathematical operations on text instead of numbers. But—and this is the key difference—such errors always have clear, identifiable causes and straightforward fixes. More importantly, Excel never guesses or invents results. The formula =SUM(A1:A10) will always return the same output for the same input, whether you’re running it for the first time or the thousandth.
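
To make the contrast concrete, here is a simplified Python model of the two Excel behaviors described above. The functions `excel_add` and `excel_sum` are hypothetical stand-ins, not Excel's actual calculation engine: `excel_add` mimics a formula like =A1+B1 and assumes that any non-numeric operand produces #VALUE! (real Excel also converts numeric-looking text such as "5", which this sketch ignores), while `excel_sum` mimics =SUM over a range, where text cells are skipped.

```python
def excel_add(a, b):
    """Simplified model of =A1+B1.

    Assumption: any operand that is not an int or float yields "#VALUE!".
    Real Excel also coerces numeric-looking text; this sketch does not.
    """
    if not isinstance(a, (int, float)) or not isinstance(b, (int, float)):
        return "#VALUE!"  # an explicit, visible error instead of a guess
    return a + b


def excel_sum(cells):
    """Simplified model of =SUM(range): numbers are added, text cells are skipped."""
    return sum(c for c in cells if isinstance(c, (int, float)))


column_a = [10, 20, 30, 40]
print(excel_add(2, 2))       # 4
print(excel_add(2, "two"))   # #VALUE!

# Same input, same output: a thousand runs produce one distinct result.
results = {excel_sum(column_a) for _ in range(1000)}
print(results)               # {100}
```

The set of results from a thousand runs contains a single value, which is the deterministic behavior the paragraph relies on.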

This deterministic nature of traditional software has reinforced our trust in computers for decades. We might have complained about user interfaces, performance, or compatibility, but we never questioned the mathematical accuracy of the results.

AI hallucinations – when math starts to fantasize

And this brings us to the core issue of today’s artificial intelligence revolution. Modern AI models—particularly large language models (LLMs)—fundamentally differ from traditional software. Instead of executing specific, deterministic operations, they are designed to recognize patterns in massive datasets and generate plausible responses based on those patterns.
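
The difference can be illustrated with a toy example. The probabilities below are invented, and a real LLM scores a very large vocabulary of tokens rather than two candidates, but the mechanism is similar in spirit: the model assigns a probability to each possible continuation and, in many deployments, samples one of them instead of always taking the most likely. `NEXT_TOKEN_PROBS` and `toy_language_model` are hypothetical names used only for this sketch.

```python
import random
from collections import Counter

# Invented next-token probabilities for the prompt "2 + 2 =".
# A real model spreads probability over a huge vocabulary; this toy table
# keeps only two candidates to show the mechanism.
NEXT_TOKEN_PROBS = {"4": 0.98, "5": 0.02}


def calculator(a: int, b: int) -> str:
    """Deterministic: the same input always produces the same output."""
    return str(a + b)


def toy_language_model(rng: random.Random) -> str:
    """Probabilistic: picks an answer in proportion to its assigned probability."""
    tokens = list(NEXT_TOKEN_PROBS)
    weights = list(NEXT_TOKEN_PROBS.values())
    return rng.choices(tokens, weights=weights, k=1)[0]


rng = random.Random(42)  # fixed seed only so this run is repeatable
calc_answers = Counter(calculator(2, 2) for _ in range(10_000))
model_answers = Counter(toy_language_model(rng) for _ in range(10_000))
print("calculator:", dict(calc_answers))
print("toy model: ", dict(model_answers))
```

Over 10,000 runs the calculator answers "4" every time, while the toy model answers "5" in roughly 2% of runs. The fixed seed makes this particular run repeatable; it does not make the model's answers correct.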

This fundamental shift in architecture leads to what experts call AI hallucinations—instances where AI models generate information that appears to be real but is completely incorrect or disconnected from reality. Importantly, these hallucinations are not random errors; they are a result of the very nature of these systems—the complex interaction between training data, model construction, and the way the model interprets queries.

The most concerning aspect is that AI hallucinations often appear with the same level of confidence as factual information. A model can confidently state that Kyiv is the capital of Ukraine (which is true) and that it hosted the 1995 Summer Olympics (which is entirely fabricated). For the user, especially a non-expert, it can be extremely difficult to distinguish fact from hallucination.

The extent of the hallucination problem

Although it’s difficult to establish exact statistics on the frequency of AI hallucinations, experts agree that this is a widespread issue affecting all large language models. Systems most prone to hallucinations are those that lack effective information verification mechanisms, rely on outdated data, and fail to properly interpret the context of queries.

The causes of hallucinations are complex and multi-layered. Among the main factors, experts point to:

- Imperfections in training data: If the data used to train the model contains errors, inaccuracies, or contradictory information, the model may replicate these issues or generate new, false content.

- Overfitting of the model: This occurs when the algorithm adapts too closely to the training data set, losing its ability to generalize and correctly identify new patterns.

- Faulty assumptions in model design: If AI developers base their design on incorrect assumptions, the model may consistently generate hallucinations.
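
Overfitting, the second cause in the list, can be demonstrated without any machine-learning library. In this sketch the data is invented and the names `memorizing_model` and `fit_line` are hypothetical: the true rule is y = 2x + 1, and the six training labels carry small amounts of noise. A degree-5 polynomial passes exactly through every training point, noise included, while an ordinary least-squares straight line cannot. Both are then asked about x = 7, an input neither has seen.

```python
from typing import Callable

# Invented toy data: the true rule is y = 2x + 1, plus a little label noise.
xs = [0, 1, 2, 3, 4, 5]
noise = [0.3, -0.2, 0.4, -0.3, 0.2, -0.4]
ys = [2 * x + 1 + n for x, n in zip(xs, noise)]


def memorizing_model(x: float) -> float:
    """Degree-5 Lagrange polynomial through every training point.

    It reproduces the training data exactly, noise included (overfitting).
    """
    total = 0.0
    for j, (xj, yj) in enumerate(zip(xs, ys)):
        basis = 1.0
        for m, xm in enumerate(xs):
            if m != j:
                basis *= (x - xm) / (xj - xm)
        total += yj * basis
    return total


def fit_line() -> Callable[[float], float]:
    """Ordinary least-squares straight line; too simple to fit the noise exactly."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sum(
        (x - mean_x) ** 2 for x in xs
    )
    intercept = mean_y - slope * mean_x
    return lambda x: slope * x + intercept


simple_model = fit_line()
x_new = 7  # an input that appears in neither model's training data
print("true value:      ", 2 * x_new + 1)
print("memorizing model:", round(memorizing_model(x_new), 2))
print("simple model:    ", round(simple_model(x_new), 2))
```

The memorizing model reproduces its training data perfectly, yet for x = 7 it lands near -81, while the straight line stays close to the true value of 15. Perfect recall of the training set is no guarantee of sensible answers on new inputs.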

Specific examples of AI systems that are particularly prone to hallucinations include Chinese models such as Qwen and DeepSeek. Despite their technological advances, these models still face this issue: they often generate information that seems plausible but is actually false or not aligned with reality, especially in contexts where the data may be incomplete or contradictory.

The problem of trust – 98% is still not enough

And here we arrive at the fundamental issue of trust. In traditional software, errors were exceptions, not the rule. In the case of artificial intelligence, hallucinations are an inherent part of the system’s operation. Even if a model generates accurate information 98% of the time, the remaining 2% is a serious problem.

Imagine using a calculator that gives the correct result 98% of the time, but in 2% of cases, it provides the wrong answer. Would we trust such a device for tasks like calculating taxes, developing medicine, or designing a bridge? The answer is clear.
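
The arithmetic behind this intuition is worth spelling out. Assuming, for simplicity, that each answer is wrong independently with a probability of 2%, the chance of at least one wrong result in n uses is 1 - 0.98^n. The function name below is illustrative only.

```python
def chance_of_at_least_one_error(accuracy: float, uses: int) -> float:
    """Probability that at least one of `uses` answers is wrong.

    Assumes every answer is independently correct with probability `accuracy`.
    """
    return 1 - accuracy ** uses


for uses in (1, 10, 50, 100):
    p = chance_of_at_least_one_error(0.98, uses)
    print(f"{uses:>3} calculations: {p:.1%} chance of at least one wrong result")
```

Under that assumption, ten calculations already carry roughly an 18% chance of at least one error, and a hundred calculations roughly 87%.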

The issue of AI hallucinations is particularly critical in fields that require complete accuracy and factual correctness, such as medicine, law, finance, and engineering. In these areas, even the slightest chance of an error can lead to disastrous consequences.

Excel vs. artificial intelligence: calculation vs. confabulation

In Excel, when an error like #VALUE! appears, the program clearly indicates that something went wrong. It doesn’t attempt to guess the result or hide the issue. Moreover, there are specific recommendations on how to resolve such errors — for example, ensuring that all values in a mathematical formula are numbers, not text.

On the other hand, in the case of AI systems, when the model doesn’t know the answer, it often generates a convincing but false response rather than acknowledging its lack of knowledge. The worst part is that the user might not even realize that the information provided is a hallucination.

According to Salesforce's report on the state of data and analytics, nearly 9 out of 10 analysts and IT leaders (87%) agree that advances in artificial intelligence have made data management a higher priority. However, the same report highlights respondents' uncertainty about data accuracy and their concerns about data security in the context of AI.

Supporters of artificial intelligence argue that the issue of hallucinations can be addressed through information verification. Indeed, fact-checking and validating the outputs of AI systems are becoming essential practices in every organization that utilizes these technologies. The problem, however, is that the need for verification significantly reduces the value of these systems.

Imagine this situation – we ask an AI assistant to write a report on the electric vehicle market. The system generates a 20-page document filled with statistics, trends, and forecasts. The document looks professional and contains convincing arguments. The problem is that we don’t know which parts of the information are accurate and which were the result of AI hallucinations. To determine this, we would need to verify every statistic, every statement, every fact. This means, in effect, we are doing the work that the AI system was supposed to do for us.

This is the fundamental paradox of the current AI revolution: systems that were supposed to save us time often require additional work to verify their results. With traditional software such as Excel, we can simply trust the calculation results and focus on interpreting the data.

The mathematically imperfect world of AI

The lack of trust in AI systems is not a matter of technophobia or resistance to change. It is a rational response to a fundamental shift in the relationship between humans and computers. For decades, we built relationships based on the deterministic reliability of computing machines. Now, we are entering an era of probabilistic models that, by nature, cannot guarantee the same level of reliability.

Perhaps the most accurate way to describe this is through the analogy of human communication. Traditional software is like an encyclopedia – it may be incomplete or contain outdated information, but what is included can be considered proven. AI, on the other hand, resembles a conversation with a person who possesses impressive but imperfect knowledge – sometimes they make mistakes, occasionally they get confused, and sometimes they distort facts.

This analogy, in the context of computers, signifies a fundamental regression in terms of reliability. We have always expected more accuracy from machines than from humans. Now, paradoxically, we find ourselves needing to verify AI-generated results with the same caution we would apply to information from an unknown individual.

In search of mathematical reliability

Does this mean we should completely abandon artificial intelligence? Absolutely not. AI systems have enormous potential in various fields – from generating creative content to analyzing vast datasets. The problem lies in the fact that we must learn a new approach to working with these systems, one that acknowledges their fundamental limitations.

Currently, intensive research is underway to reduce AI hallucinations. Proposed solutions include improving the quality of training data (the better the data, the lower the likelihood of hallucinations), developing more transparent training methods (models that are understandable and explainable are less likely to generate false information), and advancing fact-checking mechanisms (systems that automatically verify generated content against authoritative sources).
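
The third approach, automatic fact-checking, can be sketched as comparing each generated claim with a trusted reference. This is a deliberately minimal illustration, not how any particular product works: `Claim`, `TRUSTED_SOURCE`, and `check_claim` are hypothetical, and the dictionary stands in for a curated database or authoritative documents. It reuses the Kyiv example from earlier in the article.

```python
from typing import NamedTuple


class Claim(NamedTuple):
    subject: str
    relation: str
    value: str


# Hypothetical authoritative source. A real system would query curated
# databases or documents; this dictionary only stands in for one.
TRUSTED_SOURCE = {
    ("Kyiv", "capital of"): "Ukraine",
    ("ENIAC", "vacuum tubes"): "nearly 18,000",
}


def check_claim(claim: Claim) -> str:
    """Label a generated claim as supported, contradicted, or unverified."""
    known = TRUSTED_SOURCE.get((claim.subject, claim.relation))
    if known is None:
        return "unverified"  # no evidence either way: route to a human reviewer
    return "supported" if known == claim.value else "contradicted"


generated = [
    Claim("Kyiv", "capital of", "Ukraine"),
    Claim("Kyiv", "hosted", "1995 Summer Olympics"),
    Claim("ENIAC", "vacuum tubes", "about 5,000"),
]
for claim in generated:
    print(f"{check_claim(claim):>12}: {claim.subject} {claim.relation} {claim.value}")
```

The key design choice is the third outcome: a claim the reference cannot confirm is labeled "unverified" rather than assumed true, which is exactly the situation of the invented 1995 Olympics.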

New ethics of digital reality

The current revolution in artificial intelligence requires not only new tools and methodologies but also a new digital ethics framework. We need to rethink what trust between humans and machines means, the boundaries of responsibility for AI-induced errors, and how to protect ourselves from misinformation in a world where the line between fact and fiction is becoming increasingly blurred.

According to a Salesforce report exploring data trust in the age of AI, security risks and a lack of data harmonization undermine the reliability of that data. As a result, companies that use AI-based data for critical decision-making consider data security threats to be their biggest concern.

This is especially important in the context of so-called generative artificial intelligence, which carries the additional risk of company data leaking into public large language models.

Instead of a thousand words…

My distrust of the current AI revolution does not come from failing to see its potential, but from understanding its fundamental limitations. For decades, we have built our digital civilization on the foundation of reliable calculation, from the earliest mechanical calculators, through the monumental ENIAC, to today's ubiquitous spreadsheets. This mathematical certainty has been the cornerstone of progress in countless fields.

The current wave of artificial intelligence ushers us into a probabilistic world, where 98% certainty is the new norm. While this may be sufficient for many applications, much higher standards are required in critical areas such as healthcare, finance, and security.

The real challenge, then, lies in harnessing the potential of artificial intelligence without losing the mathematical certainty that has been the foundation of our trust in technology for decades. While computers can now converse, create images, and write poetry, their most crucial function remains flawless calculation—the same function they performed when they once filled entire rooms and were operated by teams of scientists in lab coats. Because in a world where distinguishing fact from fiction becomes increasingly difficult, mathematical certainty is more valuable than ever.
