© ROOT-NATION.com - Use of content is permitted with a backlink.

The European Parliament has approved a draft law that will form the basis of future AI regulation. Let’s try to understand what the EU AI Act is.

First of all, the EU AI Act is supposed to be the first law in the world to comprehensively regulate AI-related issues. So far, only the draft has been approved, not the law itself. It will now be submitted to the parliaments of 27 EU member states for adoption. In other words, the EU is trying to become a kind of AI regulator, to set certain limits and restrictions on the development and implementation of AI and machine learning algorithms.

The European Union wants to regulate artificial intelligence

The European Union is seeking to put an end to the market confusion over the use of AI algorithms and is taking on the task of regulating new types of artificial intelligence. The European Parliament has accelerated its work on the proposed Artificial Intelligence Act, which would introduce rules for the implementation of new AI programs and even ban their use in certain areas of life.

Discussions are currently underway on what form this regulation should take, given how quickly AI is developing. In particular, MEPs want the law to spell out the difference between general-purpose AI and foundation models, with the latter expected to be subject to stricter rules. This change to the previously proposed provisions is a response to the rapid development of AI in recent months. The two forms of artificial intelligence differ in the scale of their training data, their adaptability, and the likelihood of their being used for unforeseen purposes.

All of these parameters are higher in foundation models, especially in generative AI, which underlies models such as GPT-3 and GPT-4. They process huge and constantly growing amounts of data. As a result, they can quickly learn from new data and may be used in ways their creators never intended.

Based on the new recommendations, AI systems will be divided into three tiers according to the potential threats they pose. The highest levels of risk will mean restrictions on the use of machine learning technologies in sensitive sectors, and in some cases even a complete ban.

Once the AI Act takes effect, developers will have to meet a number of conditions before releasing a technology to the market, such as having it checked for threats to democracy or security under the supervision of independent experts, meeting transparency requirements, and disclosing the model’s computing power. At the same time, foundation models would not be allowed to be used to create social scoring systems (as in China) or for biometric identification.

What is the EU Artificial Intelligence Act?

The EU AI Act is a law on artificial intelligence proposed by the European Union, and the first AI law from a major regulator. It sorts uses of AI into three risk categories. Firstly, applications and systems that create unacceptable social risks, such as the government-run social scoring used in China, will be prohibited. Secondly, high-risk applications, such as CV-scanning tools that rank job applicants, must meet certain legal requirements. Finally, applications that are neither explicitly banned nor listed as high-risk are left largely unregulated.

The European Union is working on an AI Act, i.e. laws and regulations for the use of artificial intelligence aimed at limiting the harm it can cause to humans.

This is an approach based on common ethical values that are important to the Union, which puts it in opposition to the Chinese approach – based on total state control and little regard for individual rights – and in opposition to the American model, which ignores the rights of the most vulnerable citizens.

The European Commission aims to create a European regulatory framework for artificial intelligence. The aim of the Artificial Intelligence Act is to regulate the technology market not by the type of service or product, but by the level of risk that AI may pose.

Why do we need AI regulations?

The proposed AI regulation ensures that Europeans can trust what AI has to offer. While most AI systems pose little or no risk and can contribute to solving many societal problems, certain AI systems pose threats that we must consider to avoid undesirable outcomes.

For example, it is often impossible to ascertain why an AI system made a certain decision or prediction, or took a certain action. It can thus become difficult to assess whether a person has been unfairly disadvantaged, for example, in a hiring decision or in an application for a public benefit scheme.

While current legislation provides some protection, it is not sufficient to address the specific challenges that AI systems can pose.

The following rules were proposed:

- eliminate risks created specifically by AI applications

- offer a list of high-risk applications

- establish clear requirements for AI systems for high-risk applications

- define specific obligations for users of AI and providers of high-risk applications

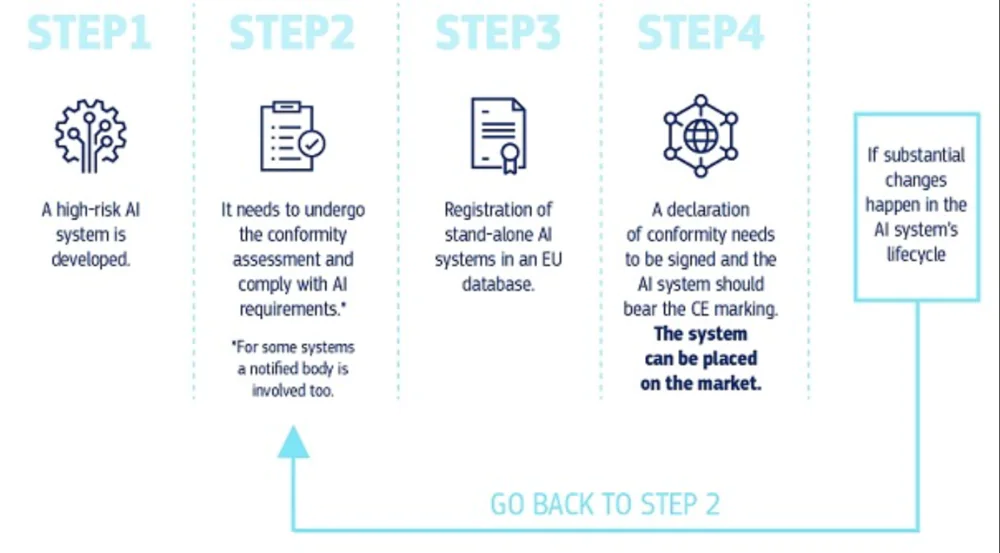

- propose a conformity assessment before an AI system is put into operation or placed on the market

- suggest areas of application after such an AI system is placed on the market

- propose a governance structure at the European and national levels.

Four levels of risk in AI

EU lawmakers have drawn up a legal framework that defines four levels of risk in AI:

- Unacceptable risk

- High risk

- Limited risk

- Minimal or no risk

Let’s take a closer look at each of them.

Unacceptable risk

As the name implies, this degree of risk is unacceptable in the development and use of AI algorithms. That is, all AI systems deemed to be a clear threat to people’s safety, livelihoods, and rights will be banned, from social scoring by governments to toys with voice assistants that encourage dangerous behaviour.

High risk

AI systems used in the following areas were classified as high-risk:

- Critical infrastructure (e.g. transport) that may endanger the life and health of citizens

- Educational or vocational training, which can determine access to education and a person’s professional path (e.g., scoring exams)

- Safety components of products (e.g. the use of artificial intelligence in surgery)

- Employment, employee management and access to self-employment (e.g. CV sorting software for recruitment procedures)

- Essential private and public services (e.g. credit scoring that denies people the opportunity to obtain a loan)

- Law enforcement that may interfere with people’s fundamental rights (e.g. assessing the reliability of evidence)

- Management of migration, asylum and border control (e.g. verifying the authenticity of travel documents)

- Administration of justice and democratic processes (e.g. application of the law to a particular set of facts).

It has been determined that high-risk AI systems will have to meet stringent requirements before they can be brought to market:

- Adequate risk assessment and mitigation systems

- High-quality data sets feeding the system to minimise risks and discriminatory outcomes

- Logging of activity to ensure that results can be traced

- Detailed documentation containing all relevant information about the system and its purpose to enable authorities to assess its compliance

- Clear and adequate information for the user

- Appropriate human oversight measures to minimise risks

- High levels of reliability, security and accuracy.

Now, let’s talk about one of the most important aspects, the one that has worried almost everyone. All remote biometric identification systems are considered high-risk and are subject to strict requirements. The use of remote biometric identification in public places for law enforcement purposes is prohibited in principle.

Strictly defined and regulated exceptions are provided for, for example, when it is necessary to search for a missing child, to prevent a specific and imminent terrorist threat, or to detect, locate, identify or prosecute a perpetrator or suspect of a criminal offence.

Such use requires the authorisation of a judicial or other independent authority and is subject to appropriate limits on its duration, its geographic reach and the databases searched.

Limited risk

Limited risk refers to AI systems with certain transparency obligations. When using AI systems such as chatbots, users need to know that they are interacting with a machine so that they can make an informed decision whether to continue or back out.

This means that no one is going to ban ChatGPT, but developers should disclose the details of their work and their future plans. Users should understand the risks they take when they trust the information they receive from chatbots.

Minimal or no risk

The European Commission allows the free use of AI with minimal risk. This includes applications such as AI-enabled video games or spam filters. The vast majority of AI systems currently in use in the EU fall into this category.

Once an AI system is on the market, authorities are responsible for market surveillance, users ensure human oversight and monitoring, and providers operate a post-market monitoring system. Providers and users will also report serious incidents and malfunctions.
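To make the four tiers easier to compare, here is a minimal Python sketch of them as a lookup table. It is only an illustration of the structure described above: the names `RiskTier`, `CONSEQUENCE`, `EXAMPLES` and `consequence_for` are hypothetical, the use-case labels are taken from this article's own examples, and classifying a real system requires legal analysis rather than a dictionary.

```python
from enum import Enum


class RiskTier(Enum):
    """The four risk levels named in the article (illustrative only)."""
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Consequence attached to each tier, paraphrased from the article.
CONSEQUENCE = {
    RiskTier.UNACCEPTABLE: "banned",
    RiskTier.HIGH: "strict requirements before market entry",
    RiskTier.LIMITED: "transparency obligations",
    RiskTier.MINIMAL: "free use",
}

# Hypothetical labels for the examples the article gives for each tier.
EXAMPLES = {
    "government social scoring": RiskTier.UNACCEPTABLE,
    "cv sorting for recruitment": RiskTier.HIGH,
    "exam scoring": RiskTier.HIGH,
    "chatbot": RiskTier.LIMITED,
    "spam filter": RiskTier.MINIMAL,
    "video game ai": RiskTier.MINIMAL,
}


def consequence_for(use_case: str) -> str:
    """Return the consequence for one of the example use cases.

    Raises KeyError for anything not listed in EXAMPLES, because this
    sketch deliberately does not guess how unknown systems are classified.
    """
    tier = EXAMPLES[use_case.lower()]
    return CONSEQUENCE[tier]


if __name__ == "__main__":
    for name in EXAMPLES:
        print(f"{name}: {consequence_for(name)}")
```

The point of the table is that the obligation follows from the tier, not from the kind of product: a chatbot and a spam filter are both software, but only the former carries transparency obligations.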

Why should you care?

AI programs influence what you see online by predicting what content you are interested in, collect and analyse data to enforce laws or personalise ads, and are used to diagnose and treat, for example, cancer. In other words, AI is affecting many areas of your life.

Similar to the EU’s 2018 General Data Protection Regulation (GDPR), the EU Artificial Intelligence Act could become the global standard for determining whether AI will have a positive, rather than negative, impact on your life, wherever you are. AI regulation in the EU is already making waves internationally. At the end of September 2021, the Brazilian Congress also passed a bill that creates a legal framework for the use of artificial intelligence. It still needs to be approved by the country’s Senate.

Future-oriented legislation

As artificial intelligence is a rapidly evolving technology, the proposal takes a forward-looking approach, allowing the rules to adapt to technological changes. AI applications must remain reliable even after they have been placed on the market. This requires vendors to manage quality and risk on an ongoing basis.

Such a law should force manufacturers and suppliers of AI algorithms to take more responsibility for what they develop, and to think about society rather than only about revenue growth and uncontrolled experiments.

EU AI Act – the first regulation of artificial intelligence

The EU Artificial Intelligence Act is set to become the first law in the world to comprehensively regulate artificial intelligence.

This is a revolutionary solution that should regulate artificial intelligence based on its potential to cause harm. AI will be prohibited from being used, for example, in social scoring systems, real-time biometric identification, or subliminal manipulation. A group of high-risk applications has also been identified, such as medical diagnostics or the judicial system. For the group of low-risk systems, only transparency obligations are to be established.

There are several loopholes and exemptions in the proposed law. These shortcomings limit the law’s ability to ensure that AI remains a force for good in your life. Currently, for example, facial recognition by the police is prohibited unless the image is captured with a delay or the technology is used to find missing children.

In addition, the law is inflexible. If a dangerous AI application is used in an unanticipated area in two years’ time, the law does not provide a mechanism to label it as “high-risk.”

The AI Act is primarily about transparency, explainability and accountability, i.e. the ability to determine who or what is responsible for a particular event. The AI Act should also provide a legal basis for the creation of a new Artificial Intelligence Authority to assist member states with technical issues.

Tech giants will pay dearly for breaching EU artificial intelligence laws

The proposed directive on AI liability would only apply to claims for damage caused by the output of an AI system, or by the system’s failure to produce an output, through the fault of a person such as a supplier or user. However, the directive does not apply to civil liability claims where the damage was caused by a human’s assessment and subsequent actions or inactions, while the AI system only provided information or advice that the person took into account. In the latter case, the damage can be traced to human actions or inactions, because the output of the AI system does not come between those actions or inactions and the damage.

Violating the bans will result in financial penalties for suppliers of the technologies concerned. Fines can reach up to €30 million or 6 per cent of a company’s annual global revenue.
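As a rough illustration of what that ceiling means for companies of different sizes, the sketch below computes the upper bound of the fine. It assumes the "whichever is higher" reading of the two limits, which the text above does not state explicitly; the function name `max_fine_eur` and the constants are hypothetical, and the result is only a ceiling, not the fine a regulator would actually impose.

```python
FLAT_CAP_EUR = 30_000_000   # the EUR 30 million figure quoted above
REVENUE_PERCENT = 6         # the 6 per cent figure quoted above


def max_fine_eur(annual_global_revenue_eur: float) -> float:
    """Upper bound of the fine for a given annual global revenue.

    Assumption (not stated in the article): the higher of the flat cap
    and the revenue-based cap applies.
    """
    if annual_global_revenue_eur < 0:
        raise ValueError("revenue must not be negative")
    revenue_based_cap = annual_global_revenue_eur * REVENUE_PERCENT / 100
    return max(FLAT_CAP_EUR, revenue_based_cap)


if __name__ == "__main__":
    for revenue in (100_000_000, 500_000_000, 1_000_000_000):
        print(f"revenue {revenue:>13,} EUR -> ceiling {max_fine_eur(revenue):,.0f} EUR")
```

Under that assumption, the flat €30 million cap is the binding limit up to €500 million in annual revenue; above that, the 6 per cent figure takes over, so a company with €1 billion in revenue faces a ceiling of €60 million.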

Of course, the outcome will depend on the decisions of the EU member states, but we can already say that this is a truly historic step. Artificial intelligence technology has enormous potential that can be used positively for economic development. At the same time, there are many risks. That is why the legislation tries to limit those risks rather than ban the technology.

In addition to adopting new legislation on artificial intelligence, the EU also intends to work with the US to create a voluntary code of practice on the technology, which is expected to be ready soon and could be offered to other countries that adhere to similar principles.

The Artificial Intelligence Act should also provide the basis for the establishment of an Artificial Intelligence Authority. It is intended to support Member States and their authorities in implementing the new regulation through technical expertise, as well as to help coordinate solutions when problems affect more than one country.
