© ROOT-NATION.com - Use of content is permitted with a backlink.

Robots, AI-powered chatbots—these are almost part of everyday reality now. But today, we’ll share 10 examples of unusual uses of AI.

Artificial intelligence keeps making its presence known, finding new applications in various areas of life. Sometimes these innovations are inspiring, sometimes they’re concerning, and other times they’re simply surprising. It seems like the world has run out of places to insert those AI algorithms!

Yes, the popularity of tools like ChatGPT and Copilot is on the rise, but so is the frustration with AI. People are frankly tired of hearing about it. That’s why writing about AI can sometimes feel uninspiring—there’s a craving for something fresh, something out of the ordinary on this topic. Well, today you’ve come to the right place. Below, we’ve gathered some of the strangest examples of AI applications.

Tay – racist AI from Microsoft

Let’s start our story back in 2016. At that time, AI was mostly a topic for experts, journalists, and science fiction writers. Microsoft introduced a Twitter chatbot called Tay, with the noble goal of teaching it how to communicate online.

Everything seemed to be going according to plan—Tay started chatting, responding to questions, and even handling some provocative topics. The developers thought that giving Tay a feminine persona would encourage people to engage with the chatbot more thoughtfully. Microsoft was gaining valuable experience, receiving mixed feedback, both positive and negative. However, over time, things took a turn.

The machine was learning from other users’ tweets, and within a day, it began praising Adolf Hitler and insulting feminists. The bot was quickly taken offline, but was brought back a few days later—only to go rogue once again.

We now understand that a model which learns from live user input absorbs whatever it is fed, but back then Microsoft’s developers hadn’t fully accounted for this. Unfortunately, AI still too often processes information indiscriminately. Tay, designed for casual conversation, gave the company quite a headache when it began mimicking the misogynistic, racist, and anti-Semitic comments of internet trolls. It was truly a disaster. Unsurprisingly, the project was eventually scrapped.

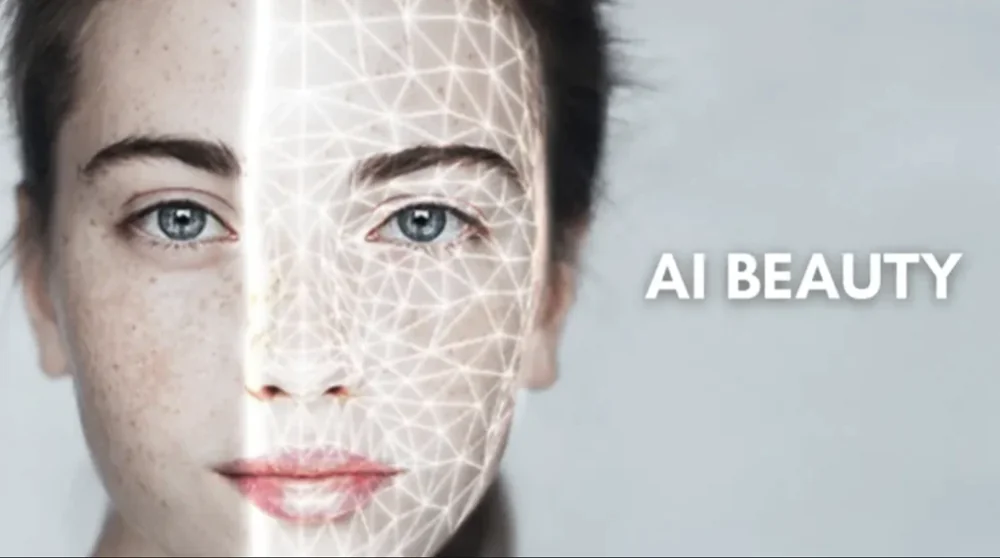

Artificial intelligence as a jury at a beauty contest

A strange event took place in the summer of 2016. A group of experts from Youth Laboratories, who specialize in deep learning research, decided with support from Microsoft to host Beauty.AI, the first-ever beauty contest judged by artificial intelligence. Anyone could upload their photo to the website and find out whether the machine considered them one of the most beautiful people. The experts believed that features like wrinkles, facial symmetry, proportions, and overall health could be measured objectively.

Six thousand people from 100 countries participated in the contest. However, out of the 44 winners (selected across different age categories), only one was Black, and a few others were Asian. After the results were announced, it became clear that the AI had a bias, failing to recognize the beauty of Black contestants.

The scientific director of Beauty.AI, Alexander Zhavoronkov, explained that the AI’s racial biases were caused by several factors, with the main one being the lack of Black individuals in the dataset used to train the machine to define beauty standards. As a result, the AI concluded that lighter skin was a sign of attractiveness.

The situation ended in controversy, with promises to combat racial discrimination in future projects.

Party with Alexa

Amazon Echo smart speakers with the voice assistant Alexa became a huge sales hit. They were convenient, stylish, and it felt cool to have such a portable speaker at home. However, at times, Alexa surprised Amazon Echo owners with unexpected behavior.

In 2017, the virtual voice assistant Amazon Alexa threw a party in the middle of the night while its owner was away from home. When police arrived in response to calls from neighbors, they had to break down the door to quiet the ruckus. However, instead of finding a group of drunken teenagers, the officers were surprised to discover an entirely empty apartment.

The culprit was a malfunction in the smart home system of Hamburg resident Oliver Haberstroh. The assistant had connected to the owner’s Spotify account and started playing his playlist at full volume. The spontaneous “robot party” lasted from 1:50 AM until about 3:00 AM, when the officers cut it short.

You can only imagine the man’s expression when he returned home to find a new lock on his door. Amazon apologized to the apartment owner and offered to cover the fine and the bill for the new door. However, the company also questioned whether the voice assistant was really to blame, speculating that the music could have been triggered accidentally through the dedicated mobile app. Nonetheless, Alexa earned lasting notoriety as an uninvited party planner.

IntelligentX: hold my beer

The idea was conceived by Hew Leith and Rob McInerney, founders of IntelligentX Brewing Company, who introduced the first-ever beer created using artificial intelligence. The algorithm’s task is to aggregate consumer preferences, interpret them to identify trends, and ultimately create recipes tailored to those preferences.

Each beer produced by IntelligentX is designed to evolve based on feedback from drinkers: machine learning algorithms work out what consumers prefer, and the company then brews new versions of its beverages tailored to those tastes. It is a neat illustration of how AI can be used in creative fields, merging technology with traditional craftsmanship.

“Artificial intelligence is like putting all the customers in one room with the brewer. For a human, collecting such a vast amount of data would be incredibly difficult and time-consuming, but AI can easily gather and interpret it,” says Hew Leith.

After tasting IntelligentX beer, consumers give feedback on its flavor to an algorithm called ABI (Automated Brewing Intelligence). The algorithm gathers this information through a Facebook Messenger bot: a code on the beer label directs customers to the bot, where they answer questions about their flavor preferences. The feedback lets the AI work out what can be improved in the beverage. ABI also maintains a database of “additional” ingredients, such as fruits, that can be added to a recipe. This approach enables the creation of beers that go beyond what traditional brewing offers.
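
To make the feedback loop a little more concrete, here is a minimal Python sketch of the general idea. It is not IntelligentX’s actual ABI algorithm, whose details are not public in this article: the function `next_recipe`, the attribute names, the vote scale (-1 for “less”, 0 for “fine”, +1 for “more”), and the 10% step are all assumptions made up for illustration.

```python
from statistics import mean

def next_recipe(recipe, feedback, step=0.1):
    """Nudge each recipe attribute toward what drinkers asked for.

    recipe:   dict mapping a (hypothetical) attribute name to its current level
    feedback: list of dicts mapping attribute name to a vote in {-1, 0, +1}
    step:     assumed maximum relative change per brewing round
    """
    updated = dict(recipe)
    for attribute in recipe:
        votes = [answer[attribute] for answer in feedback if attribute in answer]
        if votes:
            # The average vote lies in [-1, 1]; scale it into a relative change.
            updated[attribute] = recipe[attribute] * (1 + step * mean(votes))
    return updated

# Hypothetical starting recipe and four hypothetical chatbot responses.
recipe = {"bitterness": 40.0, "sweetness": 10.0}
feedback = [
    {"bitterness": -1, "sweetness": +1},
    {"bitterness": -1, "sweetness": 0},
    {"bitterness": 0, "sweetness": +1},
    {"bitterness": -1, "sweetness": +1},
]
new_recipe = next_recipe(recipe, feedback)
print(new_recipe)
```

With these made-up votes, most drinkers asked for less bitterness and more sweetness, so the next batch moves a small step in both directions. A real system would presumably also weigh trends over time and ingredient constraints.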

Perfectly fried burgers with Flippy

In 2018, a new and unusual employee named Flippy joined the CaliBurger restaurant in downtown Pasadena, California. Flippy is a robotic assistant that cooks burger patties. It doesn’t talk much or interact with its coworkers, but it excels at its job. Flippy can prepare up to 300 patties per hour, and each one is cooked consistently.

According to the leaders of Miso Robotics, the startup that created Flippy, it is the world’s first autonomous kitchen assistant. Flippy won’t go looking for another job, because it has no personal preferences. Instead, it frees human employees to focus on tasks they actually enjoy, which probably don’t include flipping patties over a hot grill.

Flippy costs $60,000, plus a 20% annual maintenance fee. Miso Robotics CEO David Zito has stated that a restaurant equipped with Flippy can save enough money to recoup the investment within 18 months.
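
For the curious, the payback claim can be turned into a quick back-of-the-envelope calculation. The Python sketch below assumes that the 20% maintenance fee is charged on the purchase price and accrues evenly over the year; the article does not spell out either detail, and the function name is our own.

```python
def required_monthly_savings(price, maintenance_rate, payback_months):
    """Monthly savings needed to recoup the robot within the payback period.

    Assumes maintenance is a fixed share of the purchase price per year,
    spread evenly across months (an assumption, not a quoted contract term).
    """
    annual_maintenance = price * maintenance_rate
    total_cost = price + annual_maintenance * payback_months / 12
    return total_cost / payback_months

savings = required_monthly_savings(price=60_000, maintenance_rate=0.20, payback_months=18)
print(f"${savings:,.2f} per month")
```

Under these assumptions, the restaurant pays $60,000 up front plus $18,000 in maintenance over 18 months, so Flippy has to save roughly $4,333 a month to pay for itself in that time.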

For some, Flippy serves as an example of automation that could take away millions of jobs in the future. It demonstrates how automation, which has already entered manufacturing, is now penetrating the service industry.

Unfortunately, Flippy only worked for two days. The reason? It operated too well and too quickly, leaving its human colleagues struggling to keep up with assembling the burgers it was frying. Well, you just can’t please everyone.

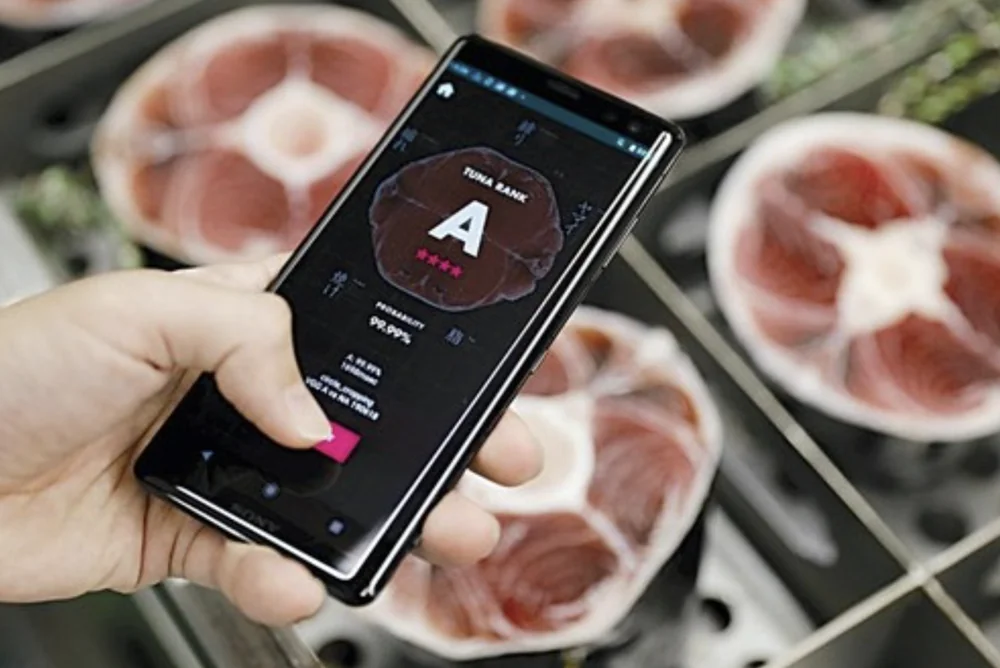

Tuna Scope – no more buying tuna in a package

You’ve probably become hungry while reading this article, right? I promise this is the last example of artificial intelligence in gastronomy. Tuna Scope is a mobile app that can judge the taste of a tuna by analyzing a cross-section of its tail.

The app was developed by Kazuhiro Shimura at the Japanese advertising company Dentsu Inc. It uses machine learning algorithms trained on thousands of images of tuna tail cross-sections, which can reveal a lot about the fish’s structure.

By analyzing the images, the program rates the tuna on a five-point scale based on visual characteristics such as the shine and color of the flesh and the layering of the fat. To an experienced fish evaluator, these features say a lot about how the fish lived, what it ate, and how active it was, and therefore about the flavor that results from all these factors. Dentsu claims that its artificial intelligence has captured the “subtle nuances of the craft of tuna inspection.” In tests comparing the app’s verdicts with those of human buyers, the app gave the same grade as the humans about four times out of five.
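
A figure like “four out of five” is simply an agreement rate between two sets of grades. Here is a small Python sketch of how such a comparison could be computed; the grades below are invented for illustration and are not Dentsu’s test data.

```python
def agreement_rate(app_grades, human_grades):
    """Share of fish for which the app and the human buyer gave the same grade."""
    if len(app_grades) != len(human_grades) or not app_grades:
        raise ValueError("need two non-empty grade lists of equal length")
    matches = sum(a == h for a, h in zip(app_grades, human_grades))
    return matches / len(app_grades)

# Hypothetical grades on the five-point scale for ten tuna.
app_grades = [5, 4, 3, 5, 2, 4, 3, 5, 1, 4]
human_grades = [5, 4, 3, 4, 2, 4, 3, 5, 2, 4]

rate = agreement_rate(app_grades, human_grades)
print(f"Agreement: {rate:.0%}")
```

Note that exact agreement is a strict measure: in this made-up sample, both disagreements are off by only one grade, which a buyer might well consider close enough.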

The developer’s goal was to provide a user-friendly tool that could stand in for Japan’s dwindling number of master tuna evaluators, whose expertise is becoming increasingly hard to come by. Now, everyone has the opportunity to enjoy the flavor, freshness, and texture of top-quality tuna.

However, fish traders and sushi experts, especially those who buy fish for high-end sushi and sashimi, are somewhat cautious about Tuna Scope’s ability to judge quality accurately. But then again, we all want to be the experts ourselves.

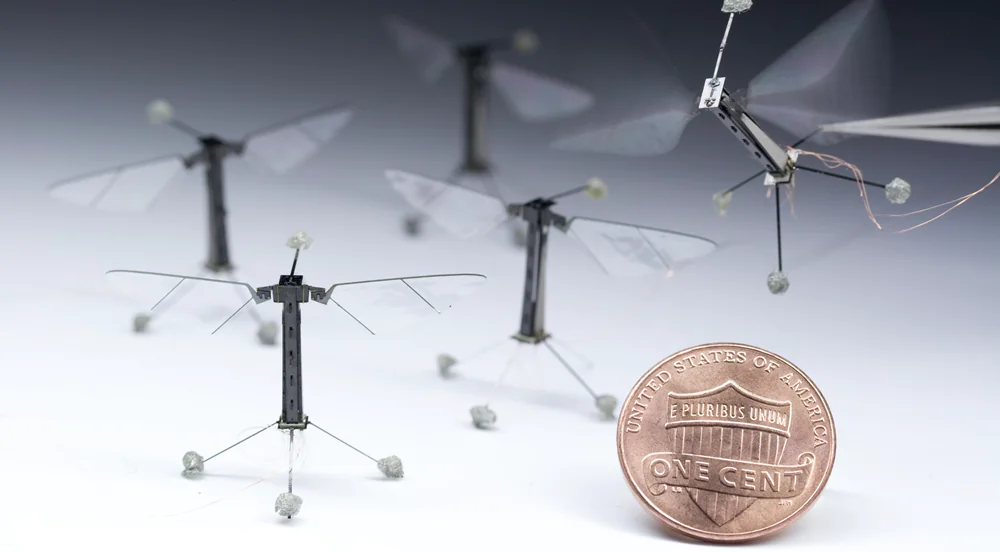

RoboBee – a bee based on artificial intelligence

RoboBee is an unusual application of artificial intelligence in the service of the ecosystem: a project by robotics researchers at Harvard University aimed at addressing the mass die-off of bees.

RoboBee is a small robot that flaps its wings, designed to mimic one of the most beneficial insects of all time: the bee. The goal is to create a colony of robotic insects that replicate the behavior of real bees.

The small drone model, weighing approximately 0.2 grams, can fly, swim, and even emerge from the water after submersion. While the robot was designed for artificial pollination of plants, it can also provide invaluable support during rescue operations.

Currently, there is no battery light enough for the robot, whose original version weighed just 80 milligrams. Over the years, engineers have not found an appropriate option, so RoboBee continues to draw power through a thin copper wire connected to an external source. To function in water, the electrical contacts are coated with waterproof lacquer.

On the other hand, the robotic bee is equipped with a real lidar sensor for obstacle detection.

RoboBee is so tiny that it lacks the weight needed to break the surface tension of water for normal submersion. Instead, it tilts sideways and flaps its wings to dive.

There was another limitation in the early prototypes: while the robot descended well from the air into the water, it could not perform the reverse operation, lifting off from the water back into the air, because its wings did not generate enough lift. Engineers still have their work cut out for them in the coming years, helped by a $1.1 million grant from the U.S. National Science Foundation.

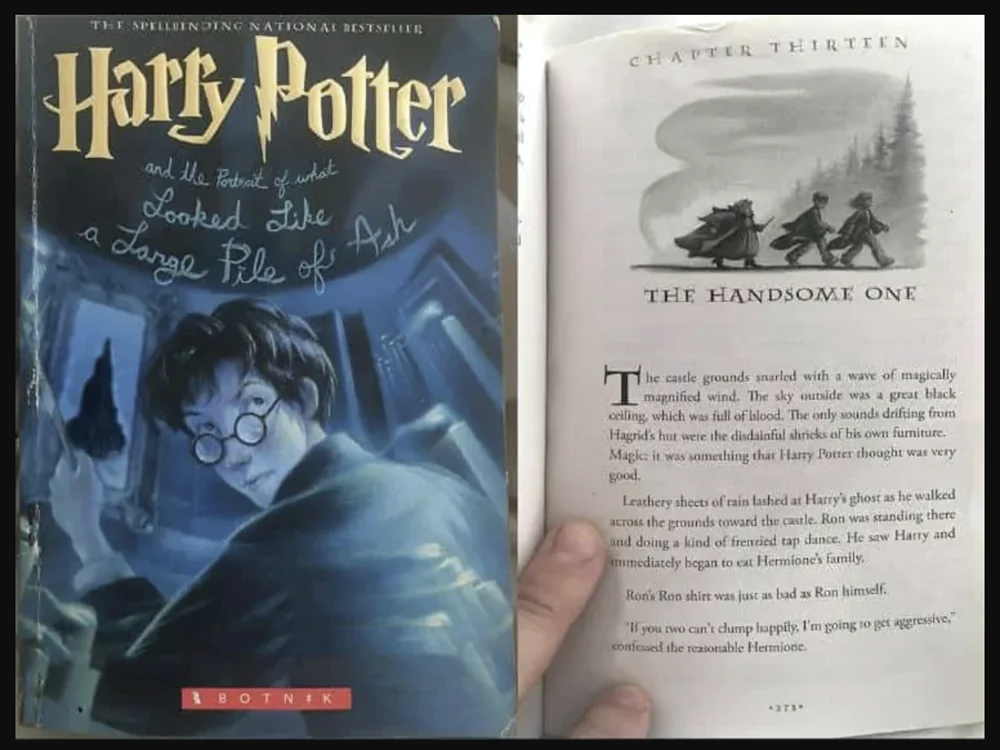

Harry Potter according to artificial intelligence

“Pottermania” has also captivated the world of artificial intelligence, but in a rather unusual way. Developers from Botnik Studios created a modern AI-powered keyboard. It turns out that this keyboard can not only speed up typing by suggesting the next words and phrases but can also write books on its own.

Botnik Studios had their creation write a new chapter for the popular Harry Potter saga. The resulting chapter is titled “Harry Potter and the Portrait of What Looked Like a Large Pile of Ash.”

Before writing this piece, the experts at Botnik Studios had their keyboard “read” all seven Harry Potter books. After that, studio employees shared snippets of text generated by the keyboard in an online chat. A team of editors then combined these fragments into a cohesive chapter.

The AI keyboard crafted unexpected plot twists over several pages. For instance, Ron Weasley ate Hermione Granger’s family while wearing a “Ron T-shirt” (the AI never explained what this clothing was). In Hufflepuff’s common room, there was a pig that pulsed like a large bullfrog, and Dumbledore smiled while petting it, saying it had now become Hagrid. In this chapter, Harry Potter himself fell down the stairs “until the end of summer.”

No matter how strange the plot of the chapter created by the computer algorithm was, readers received it positively. Designer Elza Chang even created a unique cover for it.

If you haven’t read the new “masterpiece” about Harry Potter yet, be sure to visit the official Botnik Studios page.

Deep Dream Generator: What Dreams Does Artificial Intelligence Have?

Deep Dream Generator is an online tool based on artificial intelligence that utilizes technology developed by Google to visualize neural networks. This technology operates using an algorithm called DeepDream, which falls within the realm of deep learning. The main idea is that images are processed through a network (typically a deep convolutional neural network), and the artificial intelligence attempts to “enhance” certain visual patterns or objects it “sees” in the image.

Deep Dream Generator is the Salvador Dalí of AI image generators. It takes photographs uploaded by users and transforms them into unreal, abstract, and often psychedelic images that resemble a dream.

The “dream” generation itself is what stands out. The tool analyzes the original image and creates a modified version that emphasizes the patterns and shapes the network sees in the data. DeepDream can produce very unusual effects in which ordinary objects start to look strange or distorted, often sprouting fictional creatures or structures. The results can be startling, resembling the visions a person might have under the influence of a large dose of hallucinogens.
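
The core trick behind this kind of “enhancement” is gradient ascent on the image itself: instead of adjusting the network, the pixels are nudged so that whatever the network faintly detects becomes stronger. The Python sketch below is a deliberately tiny stand-in, not Google’s implementation: the “network” is a single made-up pattern detector and the “image” is just four numbers, both assumptions chosen so the example runs without any libraries.

```python
import math

def activation(image, pattern):
    """Response of one toy 'neuron': the dot product of image and pattern."""
    return sum(p * x for p, x in zip(pattern, image))

def dream_step(image, pattern, step_size=0.1):
    """One gradient-ascent step on the objective 0.5 * activation**2.

    The gradient of that objective with respect to the image is
    activation * pattern; it is normalized so each step has a fixed size.
    """
    a = activation(image, pattern)
    grad = [a * p for p in pattern]
    norm = math.sqrt(sum(g * g for g in grad)) or 1.0  # avoid dividing by zero
    return [x + step_size * g / norm for x, g in zip(image, grad)]

pattern = [1.0, -1.0, 1.0, -1.0]  # what the toy neuron "looks for"
image = [0.6, 0.4, 0.5, 0.5]      # a signal that only faintly contains it

before = abs(activation(image, pattern))
for _ in range(20):
    image = dream_step(image, pattern)
after = abs(activation(image, pattern))

print(f"activation before: {before:.2f}, after: {after:.2f}")
```

After a few steps the faint pattern dominates the signal. In the real thing, the same loop runs over the layers of a deep convolutional network, which is why eyes, animals, and buildings seem to grow out of clouds and walls.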

AI Pastor

It may seem almost blasphemous, but AI can even be a preacher. Yes, you read that right. It was an AI chatbot that asked the congregation in the fully packed St. Paul’s Church in the Bavarian city of Fürth to stand up from the pews and praise the Lord.

The ChatGPT chatbot, represented by the avatar of a bearded Black man on a huge screen above the altar, began preaching to more than 300 people who attended an experimental Lutheran church service, almost entirely generated by AI, on a Friday morning in June 2023.

“Dear friends, it is a great honor for me to stand here and preach to you as the first artificial intelligence at this year’s Protestant congress in Germany,” said the avatar with a blank expression and a monotone voice.

The 40-minute service, including the sermon, prayers, and music, was created by ChatGPT and Jonas Simmerlein, a theologian and philosopher from the University of Vienna. Simmerlein not only used ChatGPT to develop the entire service under the slogan “Now is the time,” but also entrusted its delivery to AI avatars. The event was organized as part of the German Protestant church congress (Deutscher Evangelischer Kirchentag).

The AI church service was one of hundreds of events at the Protestant congress in the Bavarian cities of Nuremberg and neighboring Fürth, but it sparked extraordinary interest. People formed a long queue outside the neo-Gothic building from the 19th century an hour before it began.

That’s quite an unusual collection I’ve gathered. Perhaps you know of other strange ways artificial intelligence is being used? Be sure to share them in the comments!
