© ROOT-NATION.com - Use of content is permitted with a backlink.

What is Frontier, the most powerful and fastest supercomputer in the world, operating at the Oak Ridge National Laboratory, used for? From simulating reactors to predicting climate.

This question has a broader context. I am often asked why we build such powerful machines when each of us can have a computer. Supercomputers, of course, are very expensive machines that consume a tremendous amount of energy, but what we get from them is much more valuable. Today, I will try to explain this using the example of the Frontier supercomputer.

Why do we need supercomputers?

In short, supercomputers can process vast streams of information. Our world is changing rapidly, and humanity has accumulated an enormous body of data that needs to be processed, analyzed, categorized, and ultimately stored. This helps us, for example, to develop new, cheaper, and more effective medicines, create longer-lasting materials, predict climate change decades ahead, and so on. Supercomputers make it possible to model the behavior of objects we plan to build, such as thermonuclear reactors. And these are just a few examples of the applications of the fastest supercomputers. Yes, they are very expensive to produce and maintain, but it is worth it.

But let’s start with the basics. What exactly is a supercomputer? There is no precise definition yet. However, a characteristic feature of every currently operational supercomputer is its exceptionally high computing power. On the internet, you can find a simplified definition, according to which a supercomputer is a machine capable of achieving computational performance of at least 1 teraflop, i.e., 10^12 (trillion) floating-point operations per second. This is a significant amount compared to regular home computers.

If previously the speed of computers was measured in IPS (instructions per second), for supercomputers, a new unit had to be invented – FLOPS, which stands for floating-point operations per second. The higher this number, the more powerful the computer.
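
To get a feel for these units, here is a minimal Python sketch that converts a raw FLOPS figure into the prefixes used throughout this article. The helper name `format_flops` and the prefix table are illustrative assumptions, not part of any benchmark tool.

```python
# SI prefixes commonly used for FLOPS figures, largest first.
PREFIXES = [
    ("exa", 1e18),
    ("peta", 1e15),
    ("tera", 1e12),
    ("giga", 1e9),
]


def format_flops(flops: float) -> str:
    """Return a human-readable string such as '21.00 teraflops'."""
    for name, scale in PREFIXES:
        if flops >= scale:
            return f"{flops / scale:.2f} {name}flops"
    # Anything below one gigaflop is printed as a plain number.
    return f"{flops:.0f} flops"


# The 1-teraflop threshold from the simplified definition above.
print(format_flops(1e12))
# A machine a million times faster lands in the exaflop range.
print(format_flops(1e12 * 1e6))
```

Each step up the prefix ladder is a factor of a thousand, which is why an exascale machine is a million times faster than the 1-teraflop threshold.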

Certainly, an approach based on a specific computational threshold quickly becomes outdated as the performance of the processors, which are the heart of these machines, constantly evolves and grows. With every release of new processors, the performance of modern computers also increases. However, building supercomputers remains very challenging and financially demanding.

Which supercomputers are the fastest?

We have the latest data on this matter. In November 2023, Top500.org, which has regularly published rankings of the most powerful computing machines on Earth for many years, released the 62nd edition of the Top500 list. This edition showcases the 500 most powerful supercomputers currently in existence.

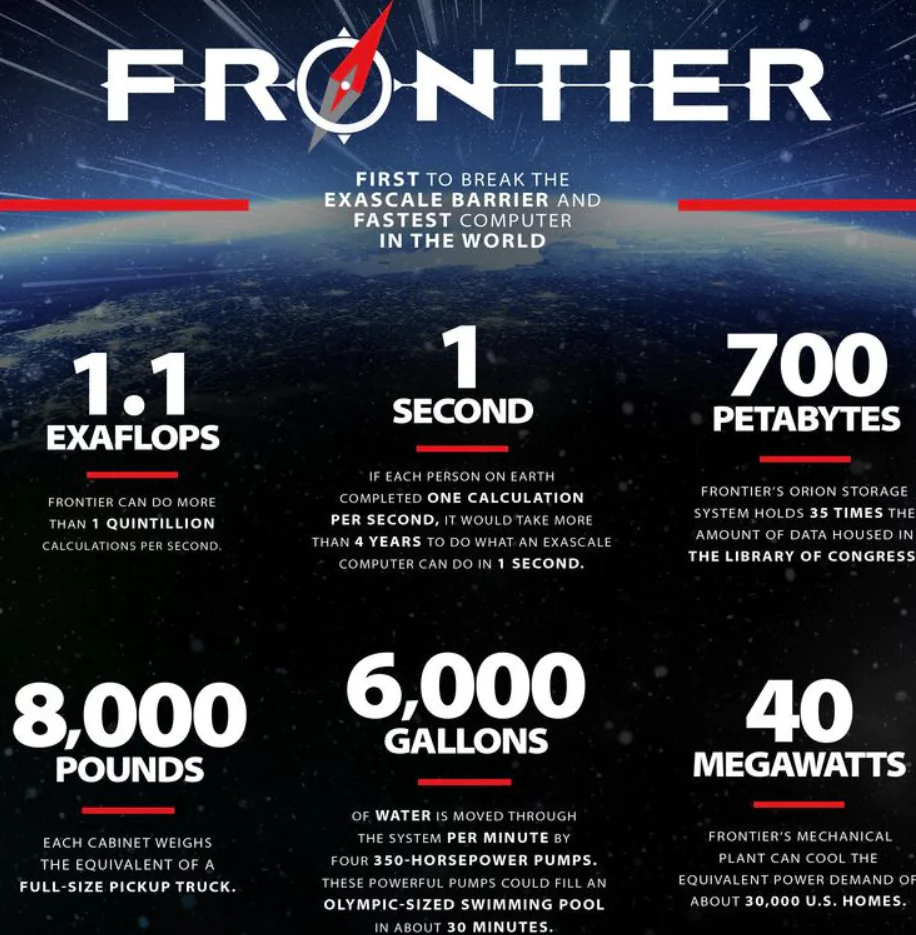

The Frontier system remains the most powerful supercomputer on the planet. It is the world’s first exascale computing system, meaning its processing speed has exceeded 1 exaflop. Located at the Oak Ridge National Laboratory in Tennessee, USA, Frontier leads the list with an HPL score of about 1.19 EFlops. HPL (High-Performance LINPACK) is the benchmark used to rank systems in the Top500; it measures how quickly a machine solves a large, dense system of linear equations. An exaflop is a quintillion, or 10^18, floating-point operations per second.

For comparison, the speed of the Apple M1 Ultra, the most powerful chip in the M1 family, is approximately 21 teraflops (a teraflop is one trillion FLOPS). The number is indeed substantial. Interestingly, back in 2002, there was a computer that achieved an even higher speed: the Earth Simulator supercomputer by the Japanese technological giant NEC Corporation. Its speed was around 36 teraflops!

Frontier utilizes AMD EPYC 64C 2 GHz processors and is based on the state-of-the-art HPE Cray EX235a architecture. It is assembled with 9,408 AMD EPYC processors and 37,632 AMD Instinct MI250x accelerators, totaling 8,699,904 processor and graphics cores. Additionally, Frontier boasts impressive energy efficiency at 52.59 GFlops/watt and employs the HPE Slingshot 11 network for data transfer. During testing, it has demonstrated the capability to deliver up to 1.1 exaflop, with a peak performance reaching a record-breaking 1.686 exaflops. The estimated cost of the system is around $600 million.
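
The efficiency figure can be turned into a rough power estimate with one division. The sketch below assumes an HPL score of about 1.19 exaflops (our reading of the Top500 figure) together with the 52.59 GFlops/watt quoted above; the function name `implied_power_megawatts` is made up for illustration.

```python
def implied_power_megawatts(hpl_flops: float, gflops_per_watt: float) -> float:
    """Power draw in MW implied by an HPL score and an efficiency figure."""
    watts = hpl_flops / (gflops_per_watt * 1e9)  # GFlops/W -> Flops/W
    return watts / 1e6                           # W -> MW


# Assumed inputs: ~1.19 exaflops HPL score, 52.59 GFlops/watt efficiency.
power_mw = implied_power_megawatts(1.19e18, 52.59)
print(f"Implied power draw during the benchmark: {power_mw:.1f} MW")
```

Under these assumptions the result lands between 22 and 23 megawatts, which gives a sense of the scale of the electricity bill for a machine of this class.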

This is the first and currently the only supercomputer in the world operating at the exaflop level. Frontier first claimed the top spot in June 2022 and has held it in every ranking since, fending off any challengers. As of the beginning of 2024, the American supercomputer remains the most powerful in the world.

However, scientists are already speculating that, perhaps in some time, it might have to concede its lead to another American machine: the Aurora supercomputer.

According to the latest rankings, the new Aurora supercomputer, equipped with Intel Sapphire Rapids chips and operating at the Argonne Leadership Computing Facility in Illinois, USA, holds second place with an HPL performance of 585.34 PFlops. Although this is only about half the performance of the top-ranking Frontier, the Aurora system is still incomplete, and only half of the planned final system is currently operational. After completion, Aurora’s projected performance is likely to exceed 2 EFlops. The Intel-built Aurora supercomputer is based on the HPE Cray EX architecture (Intel Exascale Compute Blade), using Intel Xeon CPU Max Series processors and Intel Data Center GPU Max Series accelerators. Like Frontier, it relies on the HPE Slingshot 11 network for data transfer.

The third most powerful supercomputer is the new system named Eagle, installed in the Microsoft Azure cloud in the USA. It’s a technological curiosity, as Eagle is a cloud-based supercomputer, and its third position in the Top500 ranking is the highest ever achieved by a cloud-based system. Microsoft Eagle boasts an HPL performance of 561.2 PFlops, with Intel Xeon Platinum 8480C processors and NVIDIA H100 accelerators serving as the computational core.
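
To see how the top three compare, the following sketch expresses each HPL score as a share of the leader’s. The Aurora and Eagle numbers are the ones quoted above; the Frontier value of roughly 1,193 PFlops is our reading of its score and should be treated as an assumption, as should the helper name `relative_to_leader`.

```python
# HPL scores in petaflops; the Frontier value is an assumed reading (~1.19 EFlops).
hpl_pflops = {
    "Frontier": 1193.0,
    "Aurora": 585.34,
    "Eagle": 561.2,
}


def relative_to_leader(scores: dict[str, float]) -> dict[str, float]:
    """Express every score as a fraction of the highest score."""
    leader = max(scores.values())
    return {name: score / leader for name, score in scores.items()}


shares = relative_to_leader(hpl_pflops)
for name, share in shares.items():
    print(f"{name}: {share:.0%} of the leader")
```

With these inputs both Aurora and Eagle come out at just under half of Frontier’s score, which is why a completed Aurora is seen as the most likely challenger.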

It’s worth mentioning the Japanese supercomputer Fugaku, whose performance is less than half that of Frontier, and LUMI, one of the highest-ranked supercomputers of recent years. Although China is reportedly working on something powerful, that remains a prospect for the future.

Why do we need such huge computing power?

You can admire the efficiency of the fastest machines on Earth, but the benchmarks they achieve are not about breaking records; they are about obtaining desired calculation results in the shortest possible time. No supercomputer user employs these machines for writing emails or creating presentations. Using such machines for tasks that we can perform on our home devices would be a dreadful waste. After all, the computational potential of supercomputers allows for actions that are beyond the reach of ordinary computers.

We’re talking about tasks primarily aimed at bringing tangible benefits, though not necessarily ones that can be immediately converted into monetary gains. It is worth remembering that building Frontier alone cost approximately 600 million US dollars, and its operation and ongoing maintenance are also expensive. The supercomputer occupies an area of 680 square meters and consumes 21 MW of electricity.

Frontier, like other supercomputers, is used for tasks such as climate modeling, research and development of essential drugs, exploration of new materials technologies, and more. Of course, the range of tasks performed by this type of equipment is much broader and includes, for example, analyzing vast datasets of various types (financial, medical, satellite imagery), modeling physical phenomena, and many others. The fact that Frontier is the first exascale supercomputer allows it to tackle tasks that would be challenging even for other supercomputers.

An example is the simulation of the entire life cycle of a thermonuclear reactor. Yes, that’s not a mistake. There is currently no operational thermonuclear power plant in the world, but Frontier’s exascale computational capacity makes it possible to model a hypothetical reactor and predict the behavior of the plasma inside it. Because commercial thermonuclear energy is still a distant prospect, the immediate benefits are hard to see. However, few would dispute that computing power which helps bring about thermonuclear energy, and with it an end to global energy shortages, is worth the investment.

Supercomputers and medicines

One of the applications of supercomputers involves complex and energy-intensive calculations related to the structure of chemical substances and organic compounds. The meticulous analysis of the behavior of individual substances, made possible by simulating the behavior of a given compound at a highly accurate level, serves as a tool for developing new and more efficient drugs.

Back in 2020, before Frontier was launched, scientists at the Oak Ridge National Laboratory used the Summit supercomputer, which was in operation there, to develop a new type of cancer drug. (Summit was the most powerful supercomputer in 2018; it is still in use and ranks 7th in performance with 148.8 PFlops.) The new drug turned out to be approximately 10% more effective in treatment than previously used medications. While 10% may not seem like a significant improvement, consider telling that to the patients whose lives were saved thanks to this “small” difference. A person’s life is priceless, and any drug with higher efficacy can also save significant resources in the healthcare system and reduce treatment costs. This is where Frontier’s capabilities, which surpass those of Summit, can prove invaluable.

Frontier and the new climate model

Modeling the climate involves building models and validating them against Earth’s millennia-long history. Naturally, this is a task that demands a tremendous amount of computational resources. The more accurately we want to model climate change and predict long-term trends, the faster and more powerful the supercomputers we need.

Mark Taylor from Sandia National Laboratories, one of the researchers working on the world’s first long-term climate forecasts using exascale computing, once remarked: “The unique computational architecture of the Frontier supercomputer allows us to do things we couldn’t do before.” In other words, the computational power of this machine reduces computational work that used to take years to just a few days. Simultaneously, this enables researchers to obtain detailed assessments of the long-term consequences of climate change and extreme weather conditions. “This is a new gold standard for climate modeling,” Taylor adds.

What are the advantages? They are simply enormous. The ability to predict catastrophic weather changes can save tens of thousands, if not millions, of lives. Analyzing the potential impact of climate warming on global and regional water cycles allows us to prepare for future changes in advance. While there are modern spatial models, they are extremely computationally demanding. Until recently, they were too demanding. Yes, they enable the theoretical prediction of the complex interaction between various elements that make up what we call weather or climate, such as convective movement accompanying cloud formation, but dealing with the enormous flow of information and performing calculations of incredible complexity has been a challenge.

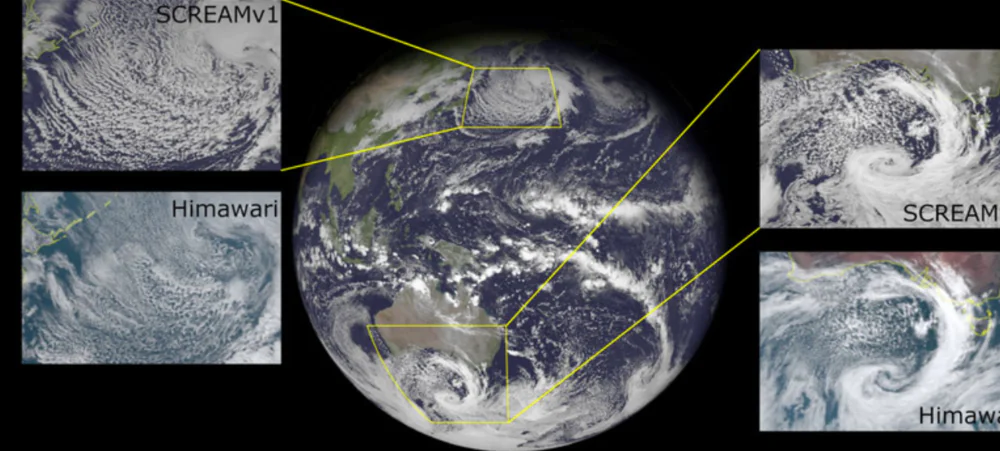

The presence of the Frontier supercomputer has already changed this. The Energy Exascale Earth System Model (E3SM) project overcomes these obstacles by combining new software approaches with immense exascale performance. Sarat Sreepathi, co-author of the research and coordinator of the E3SM project, as well as co-author of the E3SM atmospheric model called SCREAM, explains: “The climate modeling community has long dreamed of running kilometer-scale models at a speed sufficient to facilitate decade-scale predictions, and now it has become a reality.”

In other words, a project like E3SM would not have been feasible without Frontier, because the data processing capacity simply was not there. However, raw computing power is not the only crucial factor; the models themselves must also be optimized. Peter Caldwell, a climatologist at Lawrence Livermore National Laboratory, and his team have spent the last five years creating a new cloud model from scratch. It is designed to run efficiently on graphics processing units (GPUs), which now provide much of the computing power in modern supercomputers, Frontier included.

Imagine that! Adapting the code to run on graphics processing units (GPUs) has led to a significant increase in performance. Running on 8,192 Frontier nodes, SCREAM can simulate more than a year of global cloud formation (1.25 years, to be precise) in a single 24-hour computing session. Long-term simulations covering 30-40 years can therefore be completed on Frontier within a few weeks. Researchers note that such calculations were practically impossible in the past. Those interested in learning more about these studies can explore Mark Taylor and his team’s recent scientific publication.
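
The “few weeks” figure follows from simple arithmetic. The sketch below reads the number above as a throughput of 1.25 simulated years per 24-hour session, which is our interpretation, and assumes the machine runs without interruption; the function name `wall_clock_days` is illustrative.

```python
def wall_clock_days(simulated_years: float, years_per_day: float = 1.25) -> float:
    """Days of continuous computing needed to cover the simulated period."""
    return simulated_years / years_per_day


# Assumed throughput: 1.25 simulated years per 24-hour session.
for years in (30, 40):
    days = wall_clock_days(years)
    print(f"{years} simulated years -> {days:.0f} days ({days / 7:.1f} weeks)")
```

Under these assumptions, a 30-year run takes 24 days and a 40-year run takes 32 days, so three to five weeks of machine time.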

Frontier and material technologies

One of the interesting projects that leverages the exascale potential of the Frontier supercomputer is the work in materials science. It involves one of the largest computational simulations in history of an alloy, achieving an accuracy that approaches quantum levels, thanks to its computational efficiency. Conducted by Vikram Gavini from the University of Michigan, this research is innovative in that it uses Schrödinger’s equation to model the behavior of various materials. The immense computational power of Frontier enabled the simulation of a magnesium alloy system consisting of 75,000 atoms.

Magnesium alloys are a very intriguing material. They are exceptionally lightweight and, at the same time, strong. However, magnesium alloys are sensitive to specific defects, mainly dislocations (line defects in the crystalline structure of the material), which dramatically alter their mechanical properties. This turns a promising material into a piece of metal unsuitable for use because it cracks easily, is brittle, and is unstable. Thanks to the computational power of Frontier, scientists have begun to model these defects in magnesium alloys. But this is just the beginning of challenging work.

Is the future in supercomputers?

Ordinary computers are unable to tackle the challenges posed by some scientific and technical problems. Supercomputers have significantly greater computational power, enabling them to perform calculations that would be impossible or take too much time on regular computers. By creating supercomputers, scientists aim to push the boundaries of our knowledge and capabilities, addressing problems that could have a significant real-world impact on our future.

Moore’s Law states that the number of transistors on a chip doubles roughly every two years, and the computational power of computers has historically grown in a similar geometric progression. This growth allows engineers and scientists to find solutions to tasks that were once considered unattainable.
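
Doubling every two years compounds quickly. As a minimal sketch, assuming an idealized, perfectly regular doubling (real hardware does not follow the curve this neatly), the growth factor after a given number of years is 2 raised to the power of years divided by two. The function name `growth_factor` is illustrative.

```python
def growth_factor(years: float, doubling_period_years: float = 2.0) -> float:
    """Idealized growth factor after `years` of steady doubling."""
    return 2 ** (years / doubling_period_years)


for years in (2, 10, 20):
    print(f"After {years} years: x{growth_factor(years):,.0f}")
```

Ten years of such doubling gives a factor of 32, and twenty years gives a factor of 1,024.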

One day, supercomputers will become commonplace. Just a decade ago, concepts like virtual reality, cloud gaming, and the metaverse existed only in the imagination of a few tech experts and geeks, and today, they are our reality. The future generation won’t even have to pursue an IT education or work at Google to enjoy the benefits of supercomputers; quietly and seamlessly, they will become part of everyday life, much like smartphones, laptops, and other technologies.
