© ROOT-NATION.com - Use of content is permitted with a backlink.

Google has just released Gemini, its most powerful AI model yet. What is it, and what can it do? Find out in our article.

Google has been developing its own artificial intelligence tools for many years. After OpenAI released ChatGPT to the public, Google, the company behind the world’s most popular search engine, responded with a similar tool called Bard. However, Bard still lagged behind its competitors, which was unacceptable for Google. It is therefore not surprising that the company has now presented Gemini, its new language model.

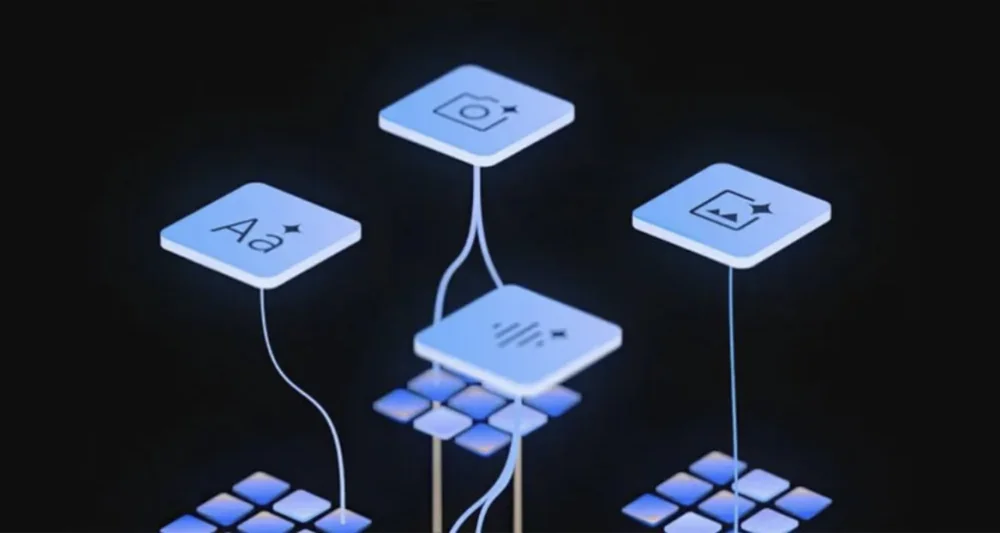

It is designed not only to improve on the tech giant’s previous large language models (LLMs) but also to offer entirely new capabilities in processing text, images, and audio.

So, let’s take a closer look at the new language model from Google, Gemini.

What is Google Gemini?

Gemini is a new and powerful artificial intelligence model from Google that can understand not only text but also images, video, and audio. As a multimodal model, it is said to be capable of tackling complex tasks in mathematics, physics, and other fields. It can also understand and generate high-quality code in various programming languages.

Currently, Gemini is available in Google Bard and on the Google Pixel 8 Pro, and it will gradually be integrated into other Google services.

“Gemini is the result of extensive collaborative efforts across Google teams, including our colleagues from Google Research,” said Demis Hassabis, CEO and co-founder of Google DeepMind. “It was built from the ground up to be multimodal, meaning it can generalize and seamlessly comprehend, manipulate, and combine different types of information, including text, code, audio, images, and video.”

Who created the model?

As you may have guessed, Gemini was created by Google, a subsidiary of Alphabet, and it is the company’s most advanced AI model to date. Google’s DeepMind division made a significant contribution to its development. It is not yet known how many employees worked on the project or how much money was allocated to it, but given Google’s resources, it is safe to assume the sum is considerable.

Capabilities

As mentioned earlier, it is a multimodal model, meaning it can comprehend, manipulate, and combine different types of data, including text, code, audio, images, and video. This provides enhanced capabilities for understanding, reasoning, and coding compared to previous AI systems.

The main capabilities of the model include:

- Natural language processing for tasks like translation, summarization, and dialogue.

- Mathematical reasoning and problem-solving.

- Ability to generate code and documentation.

- Understanding images, audio, and video.

- Multitasking across various domains.

According to Google, these capabilities surpass those of competing models.

In Google’s tests of language understanding, mathematical reasoning, and coding, Gemini Ultra outperformed models such as GPT-4. According to Google, it is the first model to outperform human experts on the Massive Multitask Language Understanding (MMLU) benchmark, with a score of 90.0%.

Across 32 widely used academic benchmarks, Gemini outperformed GPT-4 in 30, which Google presents as evidence of the model’s broad language-understanding capabilities.

Are there different versions?

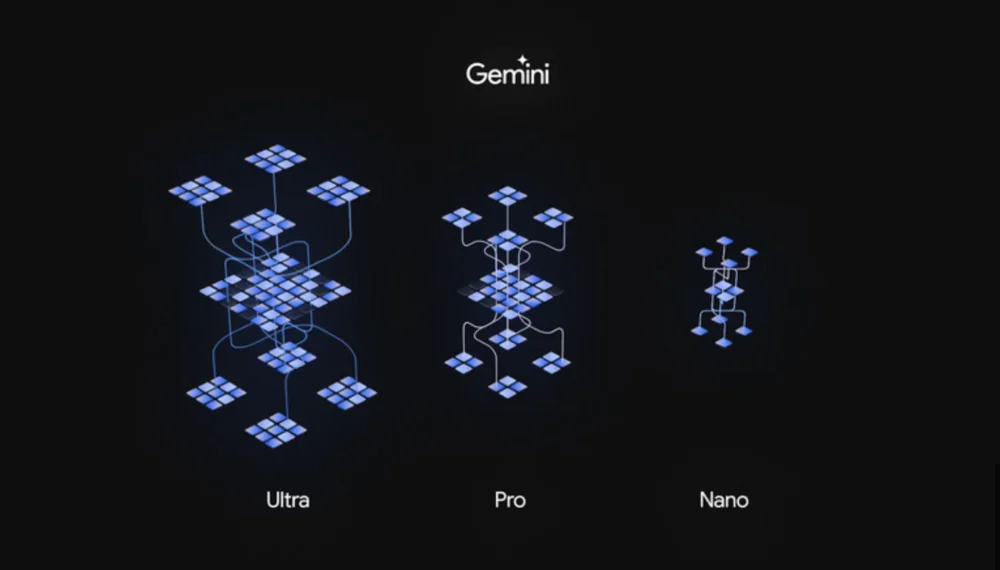

Google describes this AI as a flexible model capable of operating on any device, from Google’s data centers to mobile devices. To achieve this scalability, Gemini is released in three versions: Nano, Pro, and Ultra.

Let’s delve into the different versions of Gemini.

- Gemini Nano: Designed to run on smartphones, starting with the Google Pixel 8 Pro. It is built for tasks that require efficient on-device AI processing without relying on external servers, such as suggesting replies in chat apps or summarizing text. According to Google’s technical report, this compact on-device model comes in two sizes, with 1.8 billion and 3.25 billion parameters.

- Gemini Pro: Runs in Google’s data centers and powers the latest version of Google’s AI chatbot, Bard, serving as its core model. It offers fast response times and can understand complex queries. Google has not officially disclosed its size, but this mid-sized model reportedly has around 100 billion parameters. Pro will also be available through Google Cloud.

- Gemini Ultra: The Ultra version is not yet widely available, but Google describes it as its most capable model, exceeding “current state-of-the-art results on 30 of 32 widely used academic benchmarks applied in Large Language Model (LLM) research.” As the largest and most powerful version, Ultra reportedly has more than 1 trillion parameters. It will run in data centers, is aimed at enterprise customers, and is designed to handle highly complex tasks. Google plans to release it once the current testing phase is complete.

How to access Gemini?

Google has already built the Nano and Pro versions into its products: Nano runs on the Google Pixel 8 Pro smartphone, and Pro powers the Bard chatbot. Over time, Google plans to integrate Gemini into its search engine, advertising products, the Gmail email service, the Chrome browser, and other services.

Starting December 13, 2023, developers and enterprise customers can access Gemini Pro through the Gemini API in Google AI Studio and Google Cloud Vertex AI. Android developers can use Gemini Nano through AICore, which is available as an early preview.

Gemini in Google Bard: what will change?

According to the presentation, the model will help Google Bard handle more complex problems:

“We designed Gemini to be natively multimodal, pretrained on different modalities from the very beginning. We then fine-tuned it with additional multimodal data to further improve its performance. As a result, Gemini understands and reasons about its inputs far better than previous multimodal models, and its capabilities are state of the art in almost every domain.

The extraordinary multimodal capabilities of Gemini assist in comprehending complex textual and visual information. They prove particularly valuable when it comes to extracting specific information from vast datasets. The remarkable ability of this model to distill the essence of information from hundreds of thousands of documents by reading, filtering, and analyzing them will undoubtedly contribute to groundbreaking discoveries across various fields, from science to finance.”

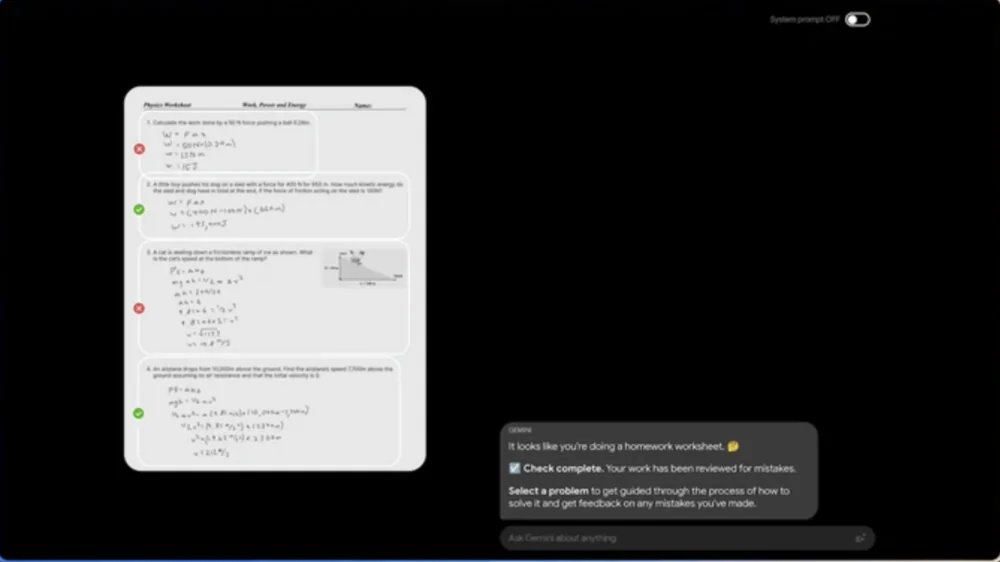

One example shown during the presentation involved a large study containing more than 200,000 records, some of which had to be updated with new data. Doing this manually would have been extremely time-consuming, so the researchers used Gemini to generate code that processed the input data and applied the necessary updates. Another, more everyday example is using Google’s language model to explain math and physics problems.

Here, the input was a photo or scan of a school homework assignment. The system processed both the graphics and the text, then pointed out which parts of the homework were correct and which needed more work. The presentation also showed that users could ask Gemini to explain a problem repeatedly, requesting a simpler explanation each time. Gemini’s accuracy will, of course, have to be verified in practice, but its ability to read and interpret text directly from photos is impressive. As mentioned during the presentation:

“Gemini has been trained to recognize and understand text, images, sounds, and much more simultaneously. This enables it to better grasp the nuances of information and respond to complex queries. It is particularly effective in explaining topics related to mathematics and physics, making it a valuable personal guide for homework assignments.”

Gemini in Google Pixel smartphones

Google also noted that Gemini was trained on its in-house TPU v4 and v5e chips. The company plans to launch Gemini Ultra in early 2024 through Bard Advanced, a new version of its consumer chatbot. Gemini Ultra is currently in testing and is already available to select safety experts.

Another important announcement is the integration of Gemini into the Google Pixel 8 Pro. Among other things, this lets the Gboard keyboard suggest quick replies in messaging apps. WhatsApp is the first app to benefit, and similar features will come to other messaging apps next year. This is only the beginning: Google has announced many new AI tools for its Pixel 8 smartphones, and some of them will eventually reach other Android devices. These are future plans, however, and no details have been provided yet.

How does Gemini differ from other AI models, such as GPT-4?

Google’s new Gemini model appears to be one of the largest and most sophisticated artificial intelligence models to date, although only the release of the Ultra version will settle this. Compared with other popular models used in AI chatbots, Gemini stands out because it was designed to be multimodal from the start, while models like GPT-4 rely on plugins and integrations with other models to become truly multimodal.

Concerns about accuracy and impartiality

Although Gemini represents a significant leap in artificial intelligence capabilities, it shares the drawbacks common to other large language models. First, it can generate misinformation. Second, it can reproduce biases rooted in its training data. Its understanding of the real world is also limited. Google acknowledges that Gemini may make mistakes, state claims not supported by evidence, and contradict common sense.

More testing is needed, especially for Gemini Ultra, whose new capabilities have not yet been fully explored. Google says it intends to evaluate Gemini thoroughly to minimize potential harm.

Future with Gemini

Google’s launch of Gemini marks a new stage in AI development. By outperforming previous models and, on some benchmarks, human experts, Gemini hints at what artificial intelligence may be capable of in the future. However, further research is still needed to address its shortcomings.

In the future, we can expect Gemini to bring more useful and intelligent features to Google products. The company plans to keep expanding Gemini’s capabilities beyond English and to build on its foundation-model approach.

We can only watch and hope that Google knows what it’s doing.