© ROOT-NATION.com - Use of content is permitted with a backlink.

Today, we will try to understand what neural networks are, how they work, and what their role is in the creation of artificial intelligence.

Neural networks. We hear this phrase almost everywhere. It has gone so far that you can even find neural networks in refrigerators (this is not a joke). They are widely used in machine learning algorithms, which run not only on computers and smartphones but also on many other electronic devices, such as household appliances. Have you ever wondered what these neural networks actually are?

Don’t worry, this will not be an academic lecture. There are many publications, including in Ukrainian, that explain the subject professionally and rigorously, in the language of the exact sciences. Some of these publications are decades old. How can such old publications still be relevant today? The fact is that the fundamentals of neural networks have not changed, and the concept itself, the mathematical model of an artificial neuron, was created during the Second World War.

It is the same with the Internet: today’s Internet is incomparably more developed than it was when the first email was sent, yet its fundamental protocols have existed since its inception. Every complex concept is built on a foundation of older structures. It is the same with our brains: the youngest part, the cerebral cortex, cannot function without the oldest evolutionary element, the brain stem, which has been in our heads since long before our species appeared on this planet.

Have I confused you a bit? So let’s look at it in more detail.


What are neural networks?

A network is a collection of certain elements. This is the simplest approach in mathematics, physics, or technology. If a computer network is a set of interconnected computers, then a neural network is obviously a set of neurons.

These elements are not even remotely comparable in complexity to the nerve cells of our brain and nervous system, but at a certain level of abstraction an artificial neuron and a biological neuron share some features. Still, it should be remembered that an artificial neuron is a much simpler concept than its biological counterpart, about which we still do not know everything.


At first, there was an artificial neuron

The first mathematical model of an artificial neuron was developed in 1943 (yes, that’s right, during the Second World War) by two American scientists, Warren McCulloch and Walter Pitts. They did it by taking an interdisciplinary approach, combining basic knowledge of brain physiology (remember when this model was created), mathematics, and the then-young field of computer science (they drew on, among other things, Alan Turing’s theory of computability). The McCulloch-Pitts artificial neuron is a very simple model: it has many inputs, and the input information passes through weights (parameters) whose values determine the behaviour of the neuron. The result is sent to a single output (see the diagram of the McCulloch-Pitts neuron).

This tree-like structure resembles a biological neuron: when we recall drawings of biological nerve cells, it is the characteristic tree-like structure of the dendrites that we remember. However, we should not be under the illusion that an artificial neuron is anywhere close to a real nerve cell. McCulloch and Pitts managed to demonstrate that any computable function can be computed by a network of interconnected neurons. We should remember, though, that these first concepts existed only “on paper” and had no physical realisation in the form of working hardware.
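To make the description above more concrete, here is a minimal Python sketch of a neuron with weighted inputs and a single output. It is an illustration, not the original 1943 formulation: the function names, the weights, and the threshold values are assumptions chosen only for this example.

```python
def threshold_neuron(inputs, weights, threshold):
    """Return 1 if the weighted sum of the inputs reaches the threshold, else 0."""
    weighted_sum = sum(x * w for x, w in zip(inputs, weights))
    return 1 if weighted_sum >= threshold else 0


# Illustrative (assumed) settings: with both weights equal to 1,
# a threshold of 2 behaves like logical AND and a threshold of 1 like logical OR.
def logical_and(a, b):
    return threshold_neuron([a, b], [1, 1], threshold=2)


def logical_or(a, b):
    return threshold_neuron([a, b], [1, 1], threshold=1)


if __name__ == "__main__":
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, "AND:", logical_and(a, b), "OR:", logical_or(a, b))
```

Changing only the threshold changes what the same neuron computes, which is the sense in which the parameters determine its behaviour.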

From model to innovative implementation

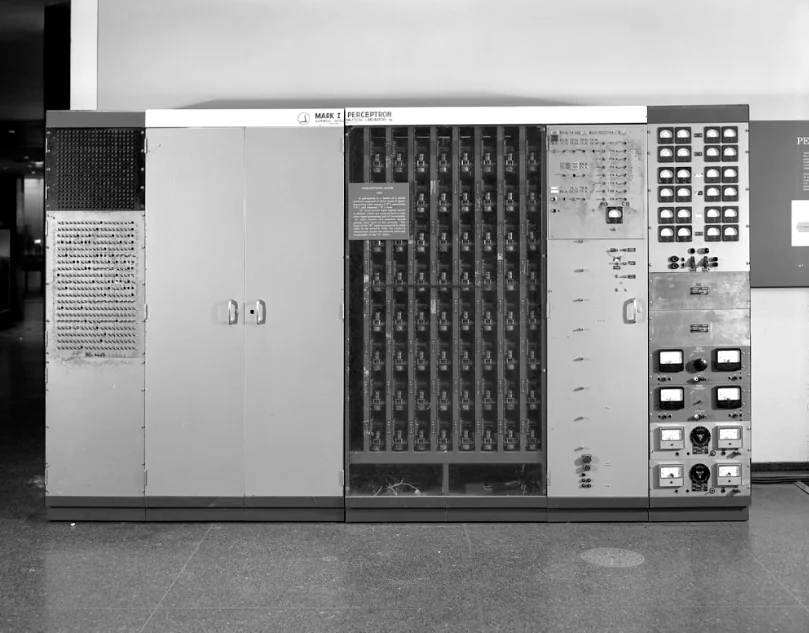

McCulloch and Pitts developed a theoretical model, but the creation of the first real neural network took more than a decade. Its creator is considered to be another pioneer of artificial intelligence research, Frank Rosenblatt, who created the Mark I Perceptron in 1957 and showed that this structure gave machines an ability previously available only to animals and humans: they could learn. However, today we know that Rosenblatt was not the only one: other scientists also came up with the idea that a machine could learn, some of them before him.
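What "learning" means for a perceptron can be sketched in a few lines of Python. The code below uses the classic perceptron learning rule (nudge each weight in proportion to the error) on a tiny, made-up task, the logical OR function. The function names, learning rate, and number of epochs are assumptions for this illustration and say nothing about how the Mark I hardware was actually built.

```python
def predict(weights, bias, inputs):
    """Output 1 if the weighted sum plus bias is positive, else 0."""
    total = bias + sum(w * x for w, x in zip(weights, inputs))
    return 1 if total > 0 else 0


def train_perceptron(samples, epochs=20, learning_rate=1.0):
    """Adjust weights with the perceptron rule: w += rate * error * input."""
    n_inputs = len(samples[0][0])
    weights = [0.0] * n_inputs
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


# Toy training set (an assumption for this example): the logical OR function.
OR_SAMPLES = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]

weights, bias = train_perceptron(OR_SAMPLES)

if __name__ == "__main__":
    for inputs, target in OR_SAMPLES:
        print(inputs, "expected", target, "got", predict(weights, bias, inputs))
```

Nobody tells the program which weights to use; it starts from zeros and corrects itself whenever its answer is wrong.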

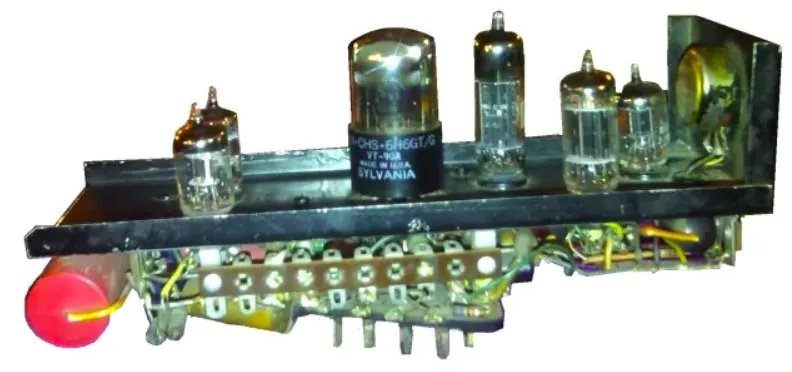

In the 1950s, many researchers and pioneers of computer science worked on how to make a machine do something it could not do on its own. For example, Arthur Samuel developed a program that played checkers against a human, and Allen Newell and Herbert Simon created a program that could prove mathematical theorems on its own. Even before Rosenblatt’s perceptron was created, two other pioneers of artificial intelligence research, Marvin Minsky and Dean Edmonds, built a machine called SNARC (Stochastic Neural Analog Reinforcement Calculator) in 1952, which is considered by many to be the first computer with a stochastic neural network. It should be noted that SNARC had nothing in common with modern computers.

This powerful machine, which used more than 3,000 vacuum tubes and a surplus autopilot mechanism from a B-24 bomber, was able to simulate the work of 40 neurons, which turned out to be enough to mathematically model a rat’s search for a way out of a maze. Of course, there was no rat; it was just a process of deduction and finding the optimal solution. This machine was part of Marvin Minsky’s doctoral thesis.

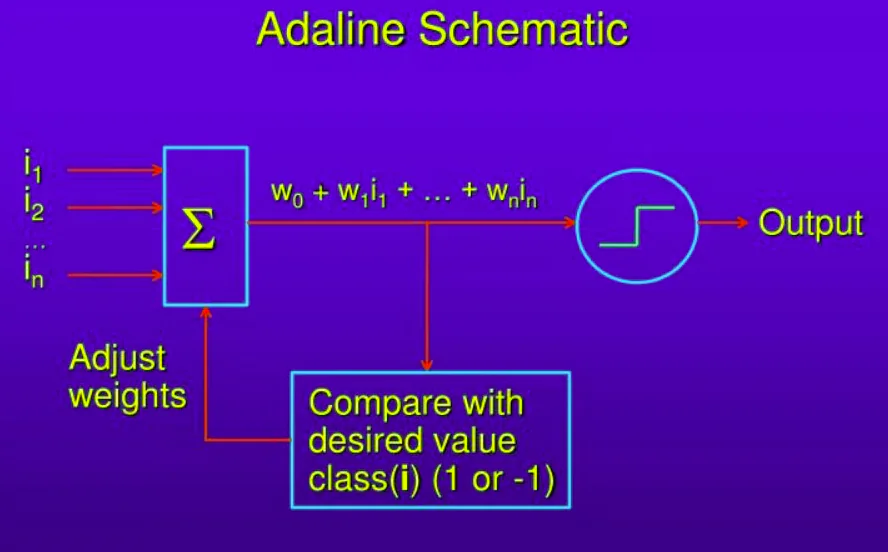

Another interesting project in the field of neural networks was the ADALINE network developed in 1960 by Bernard Widrow. This raises a question: since researchers knew the theoretical foundations of neural networks more than half a century ago and even created the first working implementations of such computational structures, why did it take so long, until the 21st century, to create real solutions based on neural networks? The main answer is insufficient computing power, although this was not the only obstacle.

In the 1950s and 1960s, many artificial intelligence pioneers were fascinated by the possibilities of neural networks, and some of them predicted that we were only ten years away from creating a machine equivalent of the human brain. This is even funny to read today, because we are still far from creating one. It quickly became clear that the logic of the first neural networks was both fascinating and limited. The first implementations of AI using artificial neurons and machine learning algorithms could solve only a narrow range of tasks.

However, when it came to broader areas and solving something really serious, such as pattern and image recognition, simultaneous translation, speech and handwriting recognition, etc., i.e. things that computers and AI can do today, the first implementations of neural networks were simply unable to cope. The explanation was provided by the research of Marvin Minsky (yes, the one from SNARC) and Seymour Papert, who in 1969 proved the limitations of perceptron logic and showed that increasing the capabilities of simple neural networks solely by scaling does not work. There was another very important barrier as well: the computing power available at the time was too small for neural networks to be used as intended.
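A well-known textbook example of such a limitation is the XOR function ("one or the other, but not both"), which no single threshold neuron can compute. The Python sketch below illustrates this by trying every combination of weights and bias on a small grid: it finds settings for AND but none for XOR. The grid, the names, and the search itself are assumptions for this illustration; a failed search is only a demonstration, not a mathematical proof.

```python
from itertools import product


def neuron(weights, bias, inputs):
    """Single threshold neuron: 1 if weighted sum plus bias is positive."""
    total = bias + sum(w * x for w, x in zip(weights, inputs))
    return 1 if total > 0 else 0


def representable(truth_table, grid):
    """Check whether any (w1, w2, bias) on the grid reproduces the truth table."""
    for w1, w2, bias in product(grid, repeat=3):
        if all(neuron((w1, w2), bias, x) == t for x, t in truth_table.items()):
            return True
    return False


# Assumed search grid: values from -4.0 to 4.0 in steps of 0.5.
GRID = [i / 2 for i in range(-8, 9)]

AND = {(0, 0): 0, (0, 1): 0, (1, 0): 0, (1, 1): 1}
XOR = {(0, 0): 0, (0, 1): 1, (1, 0): 1, (1, 1): 0}

and_ok = representable(AND, GRID)
xor_ok = representable(XOR, GRID)

if __name__ == "__main__":
    print("AND found on grid:", and_ok)
    print("XOR found on grid:", xor_ok)
```

Making the grid finer or wider does not help with XOR, because a single neuron can only separate its inputs with one straight line.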

The renaissance of neural networks

In fact, in the 1970s and 1980s, neural networks were largely forgotten. It was only at the end of the last century that the available computing power became so great that people began to return to them and develop their capabilities. It was then that new functions and algorithms emerged that could overcome the limitations of the first, simplest neural networks. That’s when the idea of deep learning with multilayer neural networks emerged.

What actually happens in these layers? Today, almost all useful neural networks operating around us are multi-layered. We have an input layer, whose task is to receive the input data, which is then passed on through parameters (weights). The number of these parameters varies depending on how complex the computational task the network has to solve is.

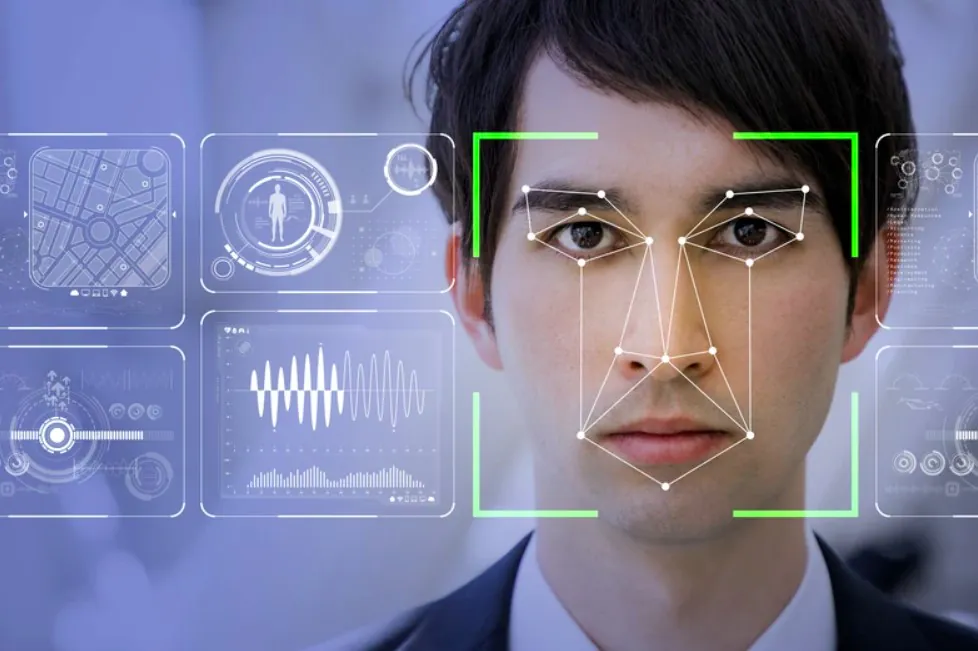

In addition, we have the so-called “hidden layers” – this is where all the “magic” associated with deep learning happens. It is the hidden layers that are responsible for the ability of a given neural network to learn and perform the necessary calculations. Finally, the last element is the output layer, i.e., the layer of the neural network that produces the desired result, for example recognised handwriting, a face, a voice, an image generated from a text description, the result of analysing a tomographic diagnostic image, and much more.
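The flow from input layer through a hidden layer to the output layer can be sketched with a tiny hand-wired network in Python. All weights and biases below are hand-picked assumptions for this illustration (a real network would learn them), and the names are hypothetical. With one hidden layer of two neurons, the network computes XOR, a function that a single neuron cannot represent.

```python
def step(value):
    """Threshold activation: 1 for positive input, otherwise 0."""
    return 1 if value > 0 else 0


def layer(inputs, weights, biases):
    """Compute the outputs of one layer; each row of weights is one neuron."""
    return [
        step(bias + sum(w * x for w, x in zip(row, inputs)))
        for row, bias in zip(weights, biases)
    ]


# Hand-picked (assumed) parameters.
# Hidden neuron 1 acts like OR, hidden neuron 2 acts like AND.
HIDDEN_WEIGHTS = [[1, 1], [1, 1]]
HIDDEN_BIASES = [-0.5, -1.5]
# The output neuron fires when OR is on and AND is off.
OUTPUT_WEIGHTS = [[1, -1]]
OUTPUT_BIASES = [-0.5]


def forward(inputs):
    """Pass the inputs through the hidden layer, then the output layer."""
    hidden = layer(inputs, HIDDEN_WEIGHTS, HIDDEN_BIASES)
    output = layer(hidden, OUTPUT_WEIGHTS, OUTPUT_BIASES)
    return output[0]


if __name__ == "__main__":
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, "->", forward([a, b]))
```

The hidden layer turns the raw inputs into intermediate features (here, "at least one is on" and "both are on"), and the output layer combines those features into the final answer.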


How do neural networks learn?

As we already know, individual neurons in neural networks process information using parameters (weights) that are assigned specific values and connections. These weights change during training, which adjusts the network so that it generates the desired result. How exactly does the network learn? Obviously, it needs to be trained. Don’t be surprised by this statement. We learn too, and this process is not chaotic but orderly; we call it education. Neural networks are trained in a similar way, usually with a suitably selected set of input data that prepares the network for the tasks it will perform in the future. All this is repeated step by step, and the training process sometimes resembles human education to some extent.

For example, if the task of a neural network is to recognise faces, it is first trained on a large number of images containing faces. During the training process, the weights (parameters) of the hidden layers change. Experts here use the phrase “minimising the cost function”. The cost function is a value that tells us to what extent a given neural network makes mistakes. The more we manage to minimise the cost function as a result of training, the better this neural network will perform in the real world. The most important feature that distinguishes a neural network from a task programmed using a classical algorithm is that, in the case of classical algorithms, the programmer must design step by step what actions the program will perform. In the case of neural networks, the network itself is able to learn how to perform tasks correctly. And often no one knows exactly how a complex neural network performs its calculations.

Today, neural networks are widely used and, perhaps surprisingly, very often without an understanding of how the computational process in a given network actually works. This is not necessary. Programmers use off-the-shelf, pre-trained neural networks that are prepared for a certain type of input, process it in a way known only to the network, and produce the desired result. The programmer does not need to know how the result is derived inside the neural network. In other words, people are left out of much of the computation and of the way neural networks receive and process information. This is the source of certain fears about artificial intelligence models. We are simply afraid that someday a neural network will set itself a task and find ways to solve it on its own, without human assistance. This worries people, causing fear and distrust of machine learning algorithms.
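Minimising a cost function can be illustrated with the smallest possible "network": a single weight that should learn to double its input. The Python sketch below uses mean squared error as the cost and plain gradient descent as the training method. Both are common choices but are assumptions here, as are the toy data, the learning rate, and all names.

```python
def cost(weight, samples):
    """Mean squared error between the predictions weight * x and the targets y."""
    return sum((weight * x - y) ** 2 for x, y in samples) / len(samples)


def cost_gradient(weight, samples):
    """Derivative of the cost with respect to the weight."""
    return sum(2 * (weight * x - y) * x for x, y in samples) / len(samples)


def train(samples, weight=0.0, learning_rate=0.05, steps=200):
    """Repeatedly move the weight a small step against the gradient."""
    history = [cost(weight, samples)]
    for _ in range(steps):
        weight -= learning_rate * cost_gradient(weight, samples)
        history.append(cost(weight, samples))
    return weight, history


# Toy data (an assumption for this example): the target is always 2 * x.
SAMPLES = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]

trained_weight, cost_history = train(SAMPLES)

if __name__ == "__main__":
    print("cost before training:", cost_history[0])
    print("cost after training: ", cost_history[-1])
    print("learned weight:      ", trained_weight)
```

The program is never told that the answer is 2; it only sees how wrong its current guess is and moves in the direction that makes the cost smaller. Real networks do the same with millions of weights at once.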

This utilitarian approach is common today. It’s the same with us: we know how to teach someone a specific activity, and we know that the training process will be effective if it is done correctly. The person will acquire the desired skills. But do we understand exactly how the deduction process takes place in their brain to produce this effect? We have no idea.

The task of scientists is to study these issues as thoroughly as possible so that neural networks can serve and help us where we need them and, most importantly, not become a threat. This is how we humans are: we are afraid of what we don’t know.
