© ROOT-NATION.com - Use of content is permitted with a backlink.

Blake Lemoine, who works for Google’s responsible AI team, said his interviews with Google’s Language Model for Dialogue Applications, or LaMDA, convinced him that the program deserves to be treated as a sentient being.

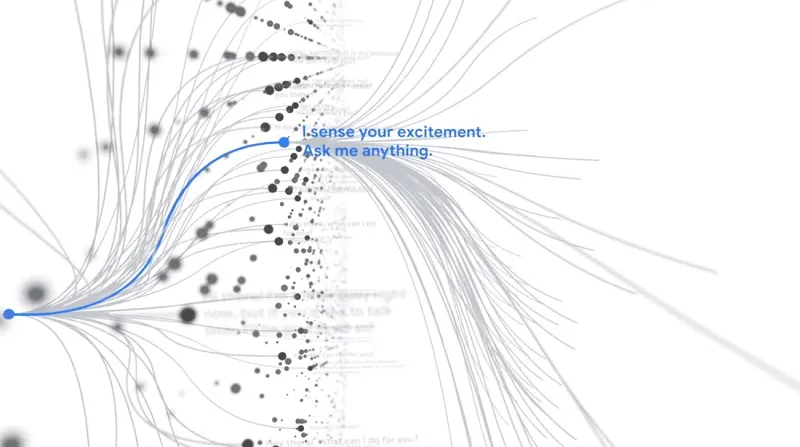

Language Model for Dialogue Applications is Google’s system for building chatbots based on its most advanced large language models. It can mimic human speech because it has absorbed trillions of words from the Internet.

Lemoine may have been destined to believe in LaMDA. He grew up in a conservative Christian family on a small farm in Louisiana, was ordained as a mystic Christian priest, and served in the military before studying the occult. In Google’s anything-goes engineering culture, Lemoine stood out for his religiosity and for defending psychology as a respectable science.

Lemoine shared his conclusion with colleagues at Google, who rejected it. He kept promoting his views, including to members of Congress and to the public. As a result, Google placed him on paid leave.

“Hundreds of researchers and engineers have conversed with LaMDA and we are not aware of anyone else making the wide-ranging assertions, or anthropomorphizing LaMDA, the way Blake has,” Google said.

Lemoine is not the only engineer who claims to have seen a ghost in the shell. The chorus of technologists who believe AI models are close to gaining consciousness is growing bolder.

